Reinforcement learning (RL) is transforming cryptocurrency trading by enabling systems to make smarter trading decisions. Unlike static rules or traditional machine learning, RL learns through trial and error to maximize long-term returns. It’s particularly effective in crypto markets, which are highly volatile and operate 24/7.

Key Takeaways:

- RL agents learn to trade by interacting with the market and refining their decisions (Buy, Sell, Hold).

- Algorithms like Deep Q-Networks (DQN) and Proximal Policy Optimization (PPO) have shown strong performance, often outperforming simpler strategies like moving averages or buy-and-hold.

- RL models consider trading fees, risk management, and market conditions, optimizing for metrics like returns and Sharpe ratios.

- Advanced setups include using historical price data, technical indicators, and portfolio details as inputs for the RL agent.

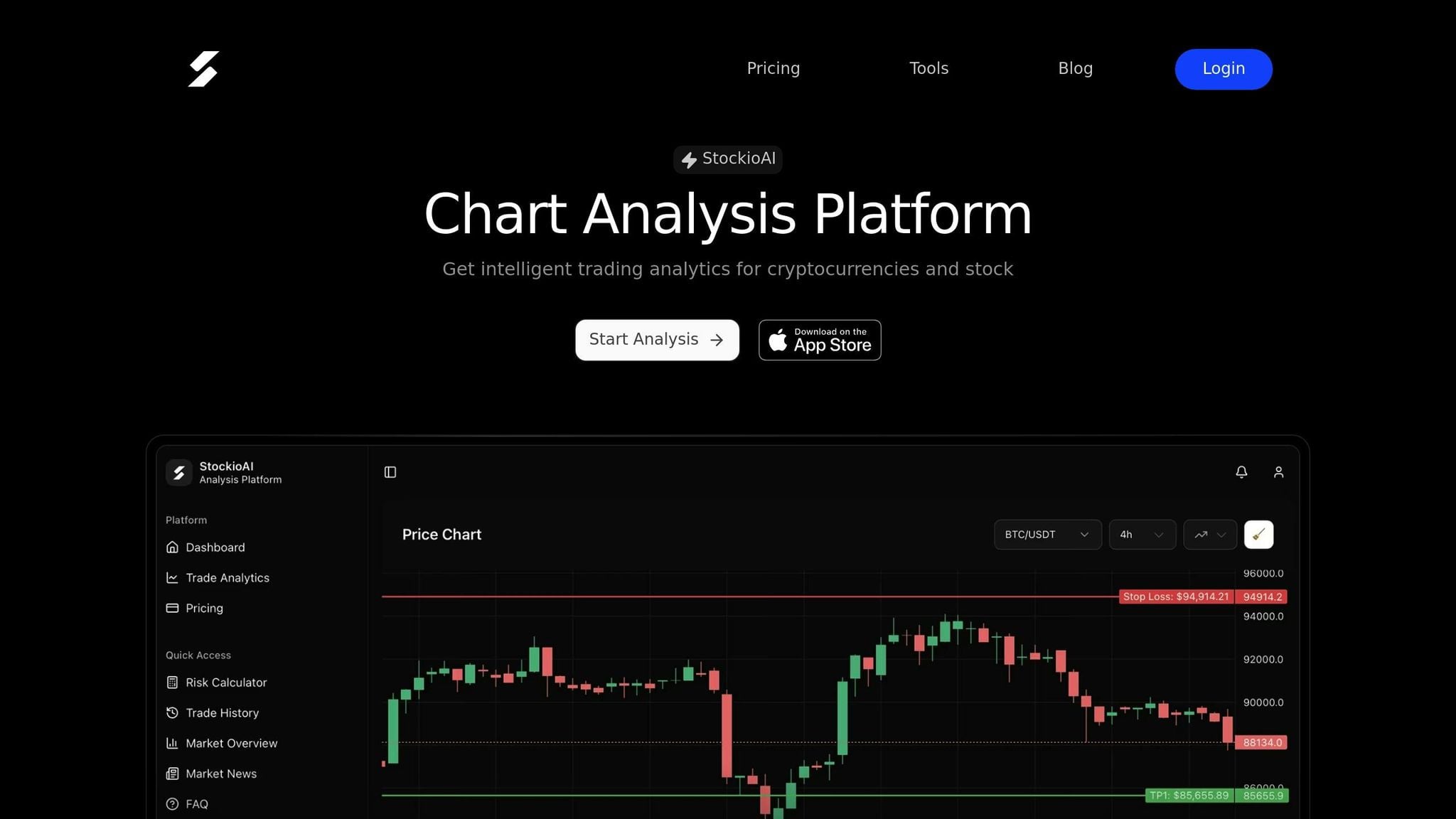

- Tools like StockioAI can complement RL strategies by providing real-time signals, confidence scores, and multi-timeframe analysis.

RL is reshaping how traders approach crypto markets, offering a dynamic way to generate signals that adapt to changing conditions while managing risk and costs. The article dives deeper into core concepts, algorithms, and implementation strategies for RL in crypto trading.


Core Concepts of Reinforcement Learning in Trading

Reinforcement learning (RL) operates on a simple yet powerful principle: act, observe, learn, and improve. In the context of crypto trading, the agent - often a neural network - learns to make profitable trades by interacting with the market. The environment is the crypto market itself, responding to the agent’s actions with price changes, transaction fees, and portfolio adjustments. The agent observes states (snapshots of market conditions) and chooses actions like buying, selling, or holding. After each action, the environment provides a reward - a measure of how effective that decision was in generating profit.

Agents, Environments, States, and Actions

A state in crypto trading captures the market’s current condition. It typically includes normalized historical price data over a rolling window (e.g., the last 50 timesteps), technical indicators like RSI and MACD, order book snapshots, portfolio details, and transaction costs. To make sense of this complex data, tools like convolutional neural networks (CNNs) and autoencoders extract patterns, enabling the agent to navigate Bitcoin’s rapid price swings and the unpredictable nature of crypto markets [2].
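As a rough illustration of the price-window part of such a state, the sketch below turns a list of closing prices into z-scored log-returns over the last 50 steps. The function name `build_state` and the choice of z-scoring are assumptions made for this example; indicators, order book data, and portfolio details would be appended in a fuller version.

```python
import math

def build_state(closes, window=50):
    """Return z-scored log-returns for the most recent `window` steps."""
    if len(closes) < window + 1:
        raise ValueError("need at least window + 1 prices")
    recent = closes[-(window + 1):]
    rets = [math.log(b / a) for a, b in zip(recent, recent[1:])]
    mean = sum(rets) / len(rets)
    var = sum((r - mean) ** 2 for r in rets) / len(rets)
    std = math.sqrt(var)
    if std < 1e-12:  # flat prices: skip scaling instead of dividing by ~0
        std = 1.0
    return [(r - mean) / std for r in rets]
```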

Actions represent the trading decisions the agent can make. These range from simple choices - like buy, sell, or hold - to more nuanced decisions, such as adjusting portfolio allocations across multiple assets. For example, an agent might allocate 40% of its portfolio to Bitcoin and 60% to Ethereum, ensuring the total allocation equals 100%. Research highlights that frequent trading often leads to losses due to accumulated transaction fees [2][3]. Understanding these dynamics is critical for designing effective reward systems and decision-making strategies.
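One common way to guarantee that allocations sum to 100% is to pass the agent's raw outputs through a softmax. The sketch below assumes the agent emits one unbounded score per asset; `to_weights` is a hypothetical helper name.

```python
import math

def to_weights(scores):
    """Map raw per-asset scores to non-negative weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Scores chosen so the result matches the 40% BTC / 60% ETH example.
btc_weight, eth_weight = to_weights([math.log(0.4), math.log(0.6)])
```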

Rewards and Policies in Crypto Trading

The reward serves as feedback, signaling whether an action was beneficial. In crypto trading, rewards are commonly tied to profit or loss, often calculated as log-returns (rₜ = log(Pₜ / P₍ₜ₋₁₎)), changes in portfolio value after accounting for fees, or risk-adjusted measures like the Sharpe ratio. Some reward systems also penalize excessive trading or high-risk behavior, encouraging agents to focus on maximizing returns while managing volatility [2][3].
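A minimal sketch of a fee-aware reward, assuming the reward is the log-return of portfolio value with the fee charged on the traded notional. The 0.1% default fee is only a placeholder for this example.

```python
import math

def step_reward(value_before, value_after, traded_notional, fee_rate=0.001):
    """Log-return of portfolio value after deducting fees on the traded amount."""
    fee = traded_notional * fee_rate
    return math.log((value_after - fee) / value_before)
```

With no trade (`traded_notional=0`) this reduces to the plain log-return, so the agent pays a visible cost only when it acts.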

A policy defines the agent’s strategy - a set of rules mapping market states to actions. Agents may follow a stochastic policy, selecting actions probabilistically, or a greedy policy, always choosing the action with the highest expected value. Techniques like actor-critic methods continuously refine these policies to maximize cumulative rewards. Greedy policies, in particular, have shown strong performance during validation tests, helping agents make precise entry and exit decisions in volatile crypto markets [2][7].
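The difference between the two policy types can be sketched in a few lines. Here the stochastic policy samples from a softmax over action values; the temperature parameter is an assumption of this example, not something the cited studies specify.

```python
import math
import random

ACTIONS = ("buy", "sell", "hold")

def greedy_action(values):
    """Index of the action with the highest estimated value."""
    return max(range(len(values)), key=lambda i: values[i])

def sample_action(values, temperature=1.0, rng=random):
    """Sample an action index with probability proportional to exp(value / T)."""
    m = max(values)
    weights = [math.exp((v - m) / temperature) for v in values]
    return rng.choices(range(len(values)), weights=weights, k=1)[0]
```

Lower temperatures make the stochastic policy behave more like the greedy one, which is one way to move from exploration during training to exploitation during validation.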

Markov Decision Processes (MDPs) in Crypto Markets

At the heart of reinforcement learning in trading lies the Markov Decision Process (MDP). This framework is defined by states (S), actions (A), transition probabilities (P), rewards (R), and a discount factor (γ). The Markov property assumes that future outcomes depend only on the current state, not on earlier ones, so the state itself must summarize whatever history matters. Within this framework, an agent in the crypto market observes states (like price matrices), performs actions (trades), collects rewards (e.g., log-returns), and transitions to new states while refining its strategy [2].
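The role of the discount factor γ is easiest to see in the quantity the agent tries to maximize, the discounted sum of future rewards. A short sketch, with γ = 0.99 as an assumed default:

```python
def discounted_return(rewards, gamma=0.99):
    """Compute r_0 + gamma * r_1 + gamma^2 * r_2 + ... by folding from the end."""
    g = 0.0
    for r in reversed(rewards):
        g = r + gamma * g
    return g
```

A γ close to 1 makes the agent value distant rewards almost as much as immediate ones; a smaller γ makes it short-sighted.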

MDPs handle crypto’s volatility by using state representations that account for temporal patterns, such as rolling price windows and technical indicators. Flexible reward functions, which penalize risk and excessive trading, help agents adapt to shifting market conditions. Advanced techniques like rolling retraining and dynamic reward adjustments further enhance adaptability. Research shows that RL agents trained on specific cryptocurrencies can generalize to new assets and maintain strong performance, even during market downturns. For instance, one agent trained on 50-timestep price matrices across multiple coins achieved an average 8% return per coin after 10 million training timesteps, with 7% returns on unseen validation data - all while accounting for 0.055% transaction fees [2][3]. Up next, we’ll explore how RL algorithms apply these principles to optimize trading signals.

RL Algorithms for Crypto Signal Optimization

Once the Markov Decision Process (MDP) framework is in place, the next step is to choose the right algorithm for generating trading signals. Two major types dominate this field: value-based methods like Deep Q-Networks (DQN) and policy-gradient methods such as Proximal Policy Optimization (PPO). Each has its strengths, and blending them often delivers more consistent results across varying market conditions. Let’s dive deeper into these reinforcement learning (RL) algorithms and how they refine crypto signal generation.

Deep Q-Networks (DQN) and Its Variants

DQN focuses on learning an action-value function, Q(s, a), which estimates the potential return of taking a specific action - buy, sell, or hold - in a particular market state. The agent selects the action with the highest Q-value at each step, making it a solid choice for discrete trading decisions. A typical crypto DQN setup might analyze 50 one-minute BTC-USD candles, 10 technical indicators (like RSI and MACD), current inventory levels, and unrealized profit/loss to calculate Q-values for possible actions. Rewards are usually tied to the change in portfolio value after each action, minus transaction costs - commonly around 0.02–0.10% per trade on U.S. exchanges.
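The value DQN regresses toward at each step is the one-step temporal-difference target. The sketch below shows only that target, not the network or training loop; `next_q_values` stands for the target network's outputs for the next state.

```python
def td_target(reward, next_q_values, done, gamma=0.99):
    """One-step DQN target: r + gamma * max_a' Q_target(s', a')."""
    if done:
        return reward  # no future value after the episode ends
    return reward + gamma * max(next_q_values)
```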

Advanced variants like Double DQN address overestimation issues by separating action selection from value evaluation, offering better performance in noisy crypto markets. Meanwhile, Dueling DQN splits the network into two components: one estimates the overall state value, and the other calculates the advantage of specific actions. This design helps the agent differentiate between favorable and unfavorable market conditions without overfitting to noise. Many RL-based trading systems utilize these techniques, with some studies reporting average returns of approximately 7–8% per coin across training and validation data when rewards are designed around per-coin percentage gains.
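Both variants come down to small changes in how values are computed. In this sketch the Q-value lists stand in for network outputs: Double DQN picks the next action with the online network but scores it with the target network, and the dueling head recombines a state value with mean-centered advantages.

```python
def double_dqn_target(reward, next_q_online, next_q_target, done, gamma=0.99):
    """Select the next action with the online net, evaluate it with the target net."""
    if done:
        return reward
    best = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    return reward + gamma * next_q_target[best]

def dueling_q(state_value, advantages):
    """Q(s, a) = V(s) + A(s, a) - mean(A(s, .))."""
    mean_adv = sum(advantages) / len(advantages)
    return [state_value + a - mean_adv for a in advantages]
```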

Proximal Policy Optimization (PPO) for Consistent Training

Unlike DQN, PPO directly learns a stochastic policy, π(a|s), which outputs action probabilities rather than estimating Q-values. It uses a clipped objective function to limit large policy updates, ensuring stability in volatile markets. For example, PPO clips the probability ratio between the new and old policies to a narrow band (e.g., 0.9 to 1.1), preventing the model from overreacting to short-term market fluctuations or outliers.
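For a single sample, the clipped objective can be written in two lines. Here `ratio` is the new policy's probability of the taken action divided by the old policy's, and `clip_eps=0.1` corresponds to the 0.9 to 1.1 band; real implementations average this over a batch and maximize it.

```python
def clipped_surrogate(ratio, advantage, clip_eps=0.1):
    """PPO per-sample objective: min(ratio * A, clip(ratio, 1 - eps, 1 + eps) * A)."""
    clipped = max(1.0 - clip_eps, min(1.0 + clip_eps, ratio))
    return min(ratio * advantage, clipped * advantage)
```

Taking the minimum means the objective stops rewarding the policy for pushing the ratio further outside the band, which is what keeps updates small.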

PPO is particularly effective for more complex trading scenarios, such as variable position sizing, frequent portfolio rebalancing, or managing multi-asset portfolios. Reward shaping in PPO often incorporates metrics that balance risk and return, like volatility-adjusted gains or ratios similar to Sharpe and Sortino. Penalties for large losses or excessive trading turnover help refine performance further. For U.S.-based traders, tweaking the reward function to discourage frequent short-term trades can simulate tax impacts, nudging the agent toward longer holding periods. Additionally, PPO uses mini-batch updates with optimizers like Adam, running multiple epochs per batch to improve sample efficiency while maintaining stable learning through its clipping mechanism.

Combining Multiple RL Models

To capitalize on the strengths of individual models, combining them into ensembles can boost overall performance. For example, merging DQN, Dueling DQN, and PPO through weighted voting or hierarchical decision-making can improve reliability across both bullish and bearish markets while reducing the risk of overfitting. Some advanced strategies even use candlestick images as inputs, effectively capturing both temporal and spatial patterns, which raw numerical data might miss.
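Weighted voting can be sketched as follows. The model names and weights are purely illustrative; in practice the weights might come from each model's recent validation performance.

```python
from collections import defaultdict

def weighted_vote(votes, weights):
    """votes: {model_name: action}; weights: {model_name: float}.

    Returns the action with the largest total weight. Ties go to the
    action that was voted for first.
    """
    tally = defaultdict(float)
    for name, action in votes.items():
        tally[action] += weights.get(name, 0.0)
    return max(tally, key=tally.get)

decision = weighted_vote(
    {"dqn": "buy", "dueling_dqn": "hold", "ppo": "hold"},
    {"dqn": 0.5, "dueling_dqn": 0.3, "ppo": 0.3},
)
```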

In an ensemble, different models can specialize in specific market conditions: DQN is highly efficient for discrete decision-making, while PPO thrives in dynamic, nonstationary environments. Before deploying these systems live, rigorous overfitting tests - such as hypothesis-based rejection of backtest results - are essential to filter out underperforming agents. To keep the ensemble adaptive, rolling retraining on data from the last three to twelve months helps align the models with evolving market trends. Adding penalties for drawdowns and transaction costs further sharpens risk-adjusted signal generation, ensuring the system remains practical for real-world trading scenarios.

Building RL Environments for Cryptocurrency Signals

Defining State and Action Spaces

The state space is the backbone of any reinforcement learning (RL) environment, as it encapsulates all the critical information an agent needs to make trading decisions. When designing an RL environment for cryptocurrency trading, start with normalized OHLCV (Open, High, Low, Close, Volume) data over a 50–100 timestep window. To enhance the agent's ability to detect market trends, include technical indicators like RSI, MACD, Bollinger Bands, and moving averages. You can further enrich the state space by adding portfolio details such as current holdings, cash balance, and unrealized profit or loss. For more advanced environments, features like order book data and transaction costs can provide additional depth.

For the action space, you can choose between a discrete setup (buy, sell, or hold) or a continuous one, depending on your trading strategy. Continuous action spaces, especially for managing multiple cryptocurrencies, allow for portfolio weight allocations where the weights sum to 1.0 across all assets. This approach offers greater flexibility and adaptability. For example, one study utilized a CNN-input matrix of normalized historical prices for multiple cryptocurrencies over a 50-step window, with actions defined as portfolio weights. By carefully defining these state and action variables, you lay the groundwork for an effective RL trading environment.
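To make the discrete setup concrete, here is a deliberately small environment with a Gym-style `reset`/`step` interface. It does not depend on any RL library, trades a single asset all-in or all-out, and uses a short window so it is easy to follow. The class name and all defaults are assumptions for this sketch.

```python
import math

class TinyTradingEnv:
    """Single-asset toy environment: state = recent log-returns + position flag."""
    BUY, SELL, HOLD = 0, 1, 2

    def __init__(self, prices, window=3, fee_rate=0.001):
        self.prices = list(prices)
        self.window = window
        self.fee_rate = fee_rate

    def _obs(self):
        w = self.prices[self.t - self.window:self.t + 1]
        rets = [math.log(b / a) for a, b in zip(w, w[1:])]
        return rets + [float(self.in_position)]

    def reset(self):
        self.t = self.window
        self.in_position = False
        return self._obs()

    def step(self, action):
        traded = False
        if action == self.BUY and not self.in_position:
            self.in_position, traded = True, True
        elif action == self.SELL and self.in_position:
            self.in_position, traded = False, True
        self.t += 1
        ret = math.log(self.prices[self.t] / self.prices[self.t - 1])
        reward = ret if self.in_position else 0.0  # earn the return only while long
        if traded:
            reward += math.log(1.0 - self.fee_rate)  # fee as a log-return penalty
        done = self.t == len(self.prices) - 1
        return self._obs(), reward, done, {}
```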

Reward Functions for Crypto Trading

Reward functions are a critical component of RL systems, as they guide the agent toward profitable decisions. A common choice is log-returns, calculated as \( r_t = \log(P_t / P_{t-1}) \).

A well-rounded reward function might combine multiple factors, such as profit targets, drawdown penalties, and trading costs, into a single equation:

\[ r = w_1 \cdot \text{profit} - w_2 \cdot \text{drawdown penalty} - w_3 \cdot \text{trading costs} \]

Here, the weights ((w_1, w_2, w_3)) can be adjusted based on your risk appetite. Adding penalties for sharp losses during market downturns can further refine the agent's behavior, especially in unpredictable markets. Dynamic reward parameterization - where weights adapt to changing market conditions - often yields better results than focusing solely on maximizing returns. Establishing a robust reward system is a crucial step before addressing the challenges of overfitting.
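A minimal sketch of such a weighted reward, assuming profit enters positively and the drawdown and cost terms are subtracted. Every quantity is expressed as a fraction of portfolio value so the weights are comparable; the default weights are placeholders, not recommendations.

```python
def composite_reward(prev_value, value, peak_value, costs, w1=1.0, w2=0.5, w3=1.0):
    """Weighted mix of step profit, current drawdown from peak, and trading costs."""
    profit = (value - prev_value) / prev_value
    drawdown = max(0.0, (peak_value - value) / peak_value)
    cost_frac = costs / prev_value
    return w1 * profit - w2 * drawdown - w3 * cost_frac
```

Raising `w2` makes the agent more conservative after losses, while raising `w3` discourages frequent trading.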

Avoiding Overfitting in Backtesting

Even with a well-designed state, action, and reward setup, overfitting remains a significant challenge when transitioning RL models to live trading. To combat this, consider rolling-window retraining, using data from the last three to twelve months to keep the model aligned with current market trends. Techniques such as experience replay, combined with target networks, L2 regularization, and dropout, can help prevent the agent from memorizing training data. For optimization, mini-batch Adam with batch sizes around 50 and a small learning rate is a common starting point; the training configuration later in this article uses 0.0001.

To ensure the model generalizes well, test it on unseen cryptocurrencies and across diverse market conditions, including crashes. For instance, one evaluation of a Double Dueling DQN agent with Prioritized Experience Replay showed it outperformed a Buy-and-Hold strategy by an average of 30% over 50 episodes, even with 0.1% trading fees. The results achieved a p-value of 0.000006, rejecting the null hypothesis that Buy-and-Hold performs better. Use techniques like cross-validation and walk-forward optimization to align out-of-sample performance with training results. Aim for average rewards in the range of 0.07–0.08 to validate the model’s reliability.
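Walk-forward evaluation can be sketched as a generator of index ranges in which every test block comes strictly after its training block. The function name and the choice to advance by one test block per fold are assumptions of this example.

```python
def walk_forward_splits(n_samples, train_size, test_size):
    """Yield (train_indices, test_indices) ranges that roll forward through time."""
    start = 0
    while start + train_size + test_size <= n_samples:
        train = range(start, start + train_size)
        test = range(start + train_size, start + train_size + test_size)
        yield train, test
        start += test_size  # slide forward by one test block

for train_idx, test_idx in walk_forward_splits(10, train_size=4, test_size=2):
    pass  # fit on train_idx, evaluate on test_idx
```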

Using StockioAI with RL-Based Trading Strategies

StockioAI's AI-Powered Trading Signals

StockioAI provides real-time Buy, Sell, and Hold signals that align seamlessly with reinforcement learning (RL) trading actions. These signals can be particularly useful when training models like Deep Q-Networks (DQN) or Proximal Policy Optimization (PPO). By integrating StockioAI's signals into state representations or ensemble inputs, you can enhance your agent's decision-making process. The platform processes over 60 real-time data points per second - covering sentiment analysis and whale movements - to deliver precise entry recommendations, complete with timing, target prices, and confidence scores.

These confidence scores can be a valuable tool for refining your RL strategies. For instance, if a DQN assigns Q-values to buy, sell, or hold actions, you could weigh those outputs against StockioAI's confidence levels to minimize false signals, especially during uncertain market conditions. Additionally, StockioAI's risk-reward metrics and stop-loss suggestions can help fine-tune reward functions, encouraging trades with higher conviction while managing potential losses. This integration merges external market insights with RL decision-making, paving the way for more advanced multi-timeframe and market regime analyses.
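One simple way to weigh Q-values against an external confidence score is to act only when both agree. The sketch below takes the external signal as plain values (an action string and a confidence between 0 and 1); this input format and the 0.6 threshold are hypothetical and do not describe StockioAI's actual output format.

```python
def gate_action(q_values, signal_action, signal_confidence, min_confidence=0.6):
    """q_values: dict mapping 'buy'/'sell'/'hold' to the agent's Q-estimates.

    Trade only if the external signal agrees and is confident enough;
    otherwise fall back to 'hold'.
    """
    best = max(q_values, key=q_values.get)
    if best == "hold":
        return "hold"
    agrees = signal_action == best and signal_confidence >= min_confidence
    return best if agrees else "hold"
```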

Multi-Timeframe Analysis

StockioAI's multi-timeframe analysis adds a crucial layer of validation by comparing short-term signals with broader market trends. This feature ensures your RL agent doesn't overreact to short-term noise that contradicts larger market movements. For example, if your RL agent operates on 15-minute states but StockioAI's higher timeframe analysis identifies a downtrend, you can adjust or penalize buy actions during training. Incorporating these multi-timeframe signals as features in your policy network can improve your model's robustness.

Additionally, the platform's integrated risk calculators make backtesting more realistic by enabling you to simulate trades with accurate position sizing. This holistic approach helps your RL agent maintain consistency across varying timeframes, reducing the likelihood of overfitting to short-term fluctuations.

Market Regime Classification with StockioAI

Understanding the market environment - whether it's trending, ranging, or volatile - is critical for optimizing RL model performance. StockioAI combines AI-driven pattern recognition with technical indicators like Bollinger Bands, RSI, and volume analysis to classify market regimes. For instance, when tight Bollinger Bands and an RSI between 40–60 are detected, StockioAI might flag a ranging market, suggesting that aggressive trading strategies may not be ideal.
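A rule of the kind described above might look like the sketch below. It is an illustrative heuristic only, not StockioAI's classifier: the band-width thresholds are invented for the example, and anything that is neither clearly volatile nor clearly ranging is labeled trending.

```python
def classify_regime(band_width, rsi, tight_width=0.04, volatile_width=0.15):
    """band_width: (upper band - lower band) / middle band; rsi: 0 to 100."""
    if band_width >= volatile_width:
        return "volatile"
    if band_width <= tight_width and 40.0 <= rsi <= 60.0:
        return "ranging"
    return "trending"
```

The returned label could be one-hot encoded as an extra state feature or used to scale a drawdown penalty in the reward.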

These regime indicators can be integrated as additional state features or used to dynamically adjust your reward function, making your RL model more adaptable. During volatile periods, marked by sharp price movements and high trading volumes, you could increase penalties for drawdowns or shift toward more conservative Hold actions. This flexibility helps your model perform well across varying market conditions while reducing overfitting. Tools like Volume Profile and Order Flow Analysis further enhance your RL agent's market understanding by offering insights into institutional activity, providing a deeper context beyond price data alone.

Implementation and Backtesting of RL-Based Crypto Strategies

This section explains how to implement and backtest reinforcement learning (RL) strategies for cryptocurrency trading, turning theoretical ideas into practical systems.

Data Preprocessing and Feature Engineering

Start by gathering historical price data for cryptocurrencies like BTC/USD and ETH/USD from exchanges such as Binance. Use OHLCV data (Open, High, Low, Close, Volume) at 1-hour intervals. To ensure clean data, fill in any missing values and remove anomalies like price spikes exceeding 5 standard deviations.[2][4]
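The two cleaning steps can be sketched with the standard library alone. Forward-filling is one of several reasonable ways to fill gaps, and the spike test here is applied to log-returns rather than raw prices; both choices are assumptions of this example.

```python
import math
import statistics

def forward_fill(values):
    """Replace None/NaN entries with the last valid value seen."""
    out, last = [], None
    for v in values:
        if v is None or (isinstance(v, float) and math.isnan(v)):
            v = last  # stays None if the series starts with a gap
        out.append(v)
        last = v
    return out

def spike_mask(closes, k=5.0):
    """Flag log-returns more than k standard deviations from the mean."""
    rets = [math.log(b / a) for a, b in zip(closes, closes[1:])]
    mu = statistics.fmean(rets)
    sigma = statistics.pstdev(rets)
    return [sigma > 0 and abs(r - mu) > k * sigma for r in rets]
```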

Next, normalize the prices and returns to have a zero mean and unit variance, addressing the non-stationary nature of crypto markets. Add technical indicators such as the Relative Strength Index (RSI) (14-period), Moving Average Convergence Divergence (MACD) (12/26-period EMA difference with a 9-period signal line), and Exponential Moving Average (EMA) (20-period). Combine these with raw prices, trading volume, and the rolling standard deviation of log-returns to create a feature-rich input matrix. Use rolling windows of 50–100 timesteps to capture market trends over time.[2][9]
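The indicators named above can be computed without external libraries, as in the sketch below. The EMA is seeded with the first value, and the RSI uses a simple average over the last 14 changes rather than Wilder's smoothing, so results will differ slightly from charting platforms.

```python
def ema(values, period):
    """Exponential moving average with smoothing factor 2 / (period + 1)."""
    alpha = 2.0 / (period + 1)
    out = [values[0]]
    for v in values[1:]:
        out.append(alpha * v + (1 - alpha) * out[-1])
    return out

def macd(closes, fast=12, slow=26, signal=9):
    """Return (MACD line, signal line) as lists aligned with `closes`."""
    line = [f - s for f, s in zip(ema(closes, fast), ema(closes, slow))]
    return line, ema(line, signal)

def rsi(closes, period=14):
    """RSI from the last `period` price changes (needs period + 1 prices)."""
    deltas = [b - a for a, b in zip(closes[-(period + 1):], closes[-period:])]
    gains = sum(d for d in deltas if d > 0)
    losses = sum(-d for d in deltas if d < 0)
    if losses == 0:
        return 100.0 if gains > 0 else 50.0
    return 100.0 - 100.0 / (1.0 + gains / losses)
```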

For more advanced feature engineering, include Bollinger Bands (20-period SMA ± 2σ), sentiment scores derived from social media, time-of-day indicators, and transaction costs (usually 0.1–0.2% on crypto exchanges). These additions help create a training environment that mirrors real-world trading conditions.[2][4]

Once the data is clean and features are prepared, you’re ready to configure and train RL models.

Training and Evaluating RL Models

Use OpenAI Gym-style frameworks to set up a training environment with the preprocessed BTC/ETH data. For Deep Q-Networks (DQN), include experience replay with a buffer of 1 million transitions, update the target network every 10,000 steps, and use the Adam optimizer with a learning rate of 0.0001. Train in batches of 32–64 over 5–10 million timesteps. Meanwhile, for Proximal Policy Optimization (PPO), use clipped surrogate objectives, add entropy regularization (0.01), and run 4–8 parallel actors to handle the volatility of crypto markets.[2][3][5]
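The experience replay component can be sketched as a fixed-capacity buffer that discards the oldest transitions first. This is a uniform-sampling version with hypothetical names; prioritized replay would need additional bookkeeping.

```python
import random
from collections import deque

class ReplayBuffer:
    """Fixed-size store of (state, action, reward, next_state, done) tuples."""

    def __init__(self, capacity=1_000_000, seed=None):
        self.buffer = deque(maxlen=capacity)  # oldest items drop off automatically
        self.rng = random.Random(seed)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append((state, action, reward, next_state, done))

    def sample(self, batch_size=32):
        """Draw a uniform random mini-batch without replacement."""
        idx = self.rng.sample(range(len(self.buffer)), batch_size)
        return [self.buffer[i] for i in idx]

    def __len__(self):
        return len(self.buffer)
```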

Evaluate the models using metrics like cumulative return, Sharpe ratio (aim for values above 1.5), maximum drawdown (keep it under 20%), and win rate (target above 55%). Backtesting results suggest that DQN variants achieve 7–10% per-trade returns on BTC, while PPO delivers around 8% returns with lower drawdowns (about 15%, compared to DQN’s 25%).[2][3][5]
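Three of these metrics are straightforward to compute from a backtest's outputs. The sketch assumes a zero risk-free rate and daily returns annualized over 365 days (crypto trades every day); adjust `periods_per_year` for other bar sizes.

```python
import math
import statistics

def sharpe_ratio(returns, periods_per_year=365):
    """Annualized mean/stdev of per-period returns, risk-free rate assumed 0."""
    sd = statistics.stdev(returns)
    if sd == 0:
        return 0.0
    return statistics.fmean(returns) / sd * math.sqrt(periods_per_year)

def max_drawdown(equity):
    """Largest peak-to-trough decline as a fraction of the peak."""
    peak, worst = equity[0], 0.0
    for v in equity:
        peak = max(peak, v)
        worst = max(worst, (peak - v) / peak)
    return worst

def win_rate(trade_pnls):
    """Share of trades with strictly positive profit."""
    return sum(p > 0 for p in trade_pnls) / len(trade_pnls)
```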

To improve reliability, use rolling-window retraining. Train the models on 2022–2023 data and validate them on 2024 data. Apply techniques like L2 regularization (0.0001), dropout (0.1–0.2), and early stopping based on out-of-sample Sharpe ratios.[2][4]

For example, one study showed that a Double Dueling DQN agent with 0.1% trading fees outperformed the Buy-and-Hold strategy by 30% over 50 evaluation episodes, with a statistically significant p-value of 0.000006.[8]

These steps help confirm that a model is robust enough to move on to paper trading and, eventually, live deployment.

Deploying RL Models for Live Trading

Before live deployment, test the models in a paper-trading environment for 1–3 months using real-time market feeds. Account for realistic slippage (0.05–0.5%) and maintain system latency below 100 milliseconds. Use conservative position sizing, risking only 1–2% per trade. Implement kill-switches to halt trading if drawdowns exceed 10%.[2][4]
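The position-sizing and kill-switch rules can be sketched as below. Sizing assumes a stop-loss price is known for each trade, which the text does not specify, and the kill-switch measures drawdown from the highest equity seen so far. Names and structure are hypothetical.

```python
def position_size(equity, entry_price, stop_price, risk_fraction=0.01):
    """Units to trade so that hitting the stop loses `risk_fraction` of equity."""
    risk_per_unit = abs(entry_price - stop_price)
    if risk_per_unit == 0:
        raise ValueError("stop price must differ from entry price")
    return equity * risk_fraction / risk_per_unit

class KillSwitch:
    """Latches to halted once drawdown from peak equity exceeds the limit."""

    def __init__(self, max_drawdown=0.10):
        self.max_drawdown = max_drawdown
        self.peak = None
        self.halted = False

    def update(self, equity):
        self.peak = equity if self.peak is None else max(self.peak, equity)
        if (self.peak - equity) / self.peak > self.max_drawdown:
            self.halted = True  # stays halted until manually reset
        return self.halted
```

The switch deliberately does not re-enable itself when equity recovers, so a human has to review the strategy before trading resumes.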

Retrain the models weekly with updated data to adapt to evolving market conditions. Tools like StockioAI’s regime detection and multi-timeframe validation can enhance live performance. For instance, these tools have been shown to improve Sharpe ratios by 20–30% compared to standalone RL models. StockioAI analyzes over 60 real-time data points per second and provides automated crypto insights every 4 hours, helping you stay ahead of market changes.[1]

Monitor the live models using the same metrics as backtesting - Sharpe ratio, maximum drawdown, and win rate. Additionally, track execution quality by measuring actual slippage and latency. If performance metrics drop significantly, adjust the reward function or revert to paper trading to reassess the strategy.

This iterative approach ensures that your trading system remains reliable and responsive to the fast-changing crypto market.

Conclusion

Reinforcement learning (RL) is reshaping cryptocurrency trading by enabling systems to develop strategies that adapt to the unpredictable and ever-changing nature of these markets. Unlike static buy-and-hold approaches, RL models improve through trial and error, often achieving returns that stand out in comparison [2]. These techniques naturally lend themselves to practical trading strategies and seamless integration into real-world systems.

To successfully deploy RL in trading, it's crucial to structure rewards carefully and conduct thorough backtesting. This means accounting for realistic trading fees, penalizing large drawdowns, and using rolling-window backtesting to ensure models perform well in diverse market conditions. Combining multiple RL algorithms through ensemble methods has shown resilience in both bullish and bearish market phases [6].

Building on these foundational principles, tools like StockioAI take RL strategies to the next level. By analyzing over 60 data points per second and generating automated crypto insights every four hours, StockioAI enhances the training environment with richer inputs. This helps RL agents adapt to shifting market dynamics. When RL-powered signals are paired with StockioAI's real-time analytics, the results speak volumes - traders report a 20–30% improvement in Sharpe ratios compared to using RL models alone [1]. Advanced features like regime detection and multi-timeframe analysis further refine reward functions and stabilize trading strategies.

For those starting with RL, it's wise to begin with straightforward models like DQN or PPO, using historical BTC/ETH data. Factor in realistic trading fees and slippage, and validate your approach through out-of-sample testing. Begin with paper trading for 1–3 months, risking only 1–2% per trade, before transitioning to live trading.

The future of cryptocurrency trading lies in hybrid systems that merge RL's adaptive learning capabilities with the real-time intelligence of AI platforms. As markets grow more intricate and competitive, traders who combine algorithmic precision with disciplined risk management will be best equipped to achieve steady returns in this fast-paced environment.

FAQs

What makes reinforcement learning different from traditional crypto trading strategies?

Reinforcement learning takes a different approach compared to traditional crypto trading strategies by using AI algorithms that learn and evolve through trial and error. Instead of sticking to fixed rules or relying on static indicators, it adapts in real time to shifting market conditions, fine-tuning its decisions to identify the best trading opportunities.

This makes it especially effective in the ever-changing world of cryptocurrency, where conventional strategies often fall short. By learning from the outcomes of previous trades, reinforcement learning can spot patterns and opportunities that might be missed through more rigid methods.

How do algorithms like DQN and PPO benefit cryptocurrency trading?

Reinforcement learning algorithms like DQN (Deep Q-Network) and PPO (Proximal Policy Optimization) are transforming cryptocurrency trading by refining how decisions are made. These models adapt to market shifts, learning from fluctuations to help traders fine-tune their strategies and improve outcomes over time.

By identifying patterns and responding to evolving conditions, these algorithms aim to balance risk management with the pursuit of higher returns. They empower traders to make smarter, data-driven choices, proving to be essential tools in the fast-paced and unpredictable world of cryptocurrency trading.

How does StockioAI improve reinforcement learning strategies for cryptocurrency trading?

StockioAI brings a fresh edge to cryptocurrency trading by leveraging AI to deliver real-time insights. It provides tools like technical analysis, market sentiment tracking, and pattern recognition to help traders respond effectively to ever-changing market conditions.

With features such as clear Buy, Sell, and Hold signals and advanced risk management tools, StockioAI equips users to fine-tune their trading strategies. These insights enable traders to handle market volatility more confidently and make more precise decisions on when to enter or exit trades.