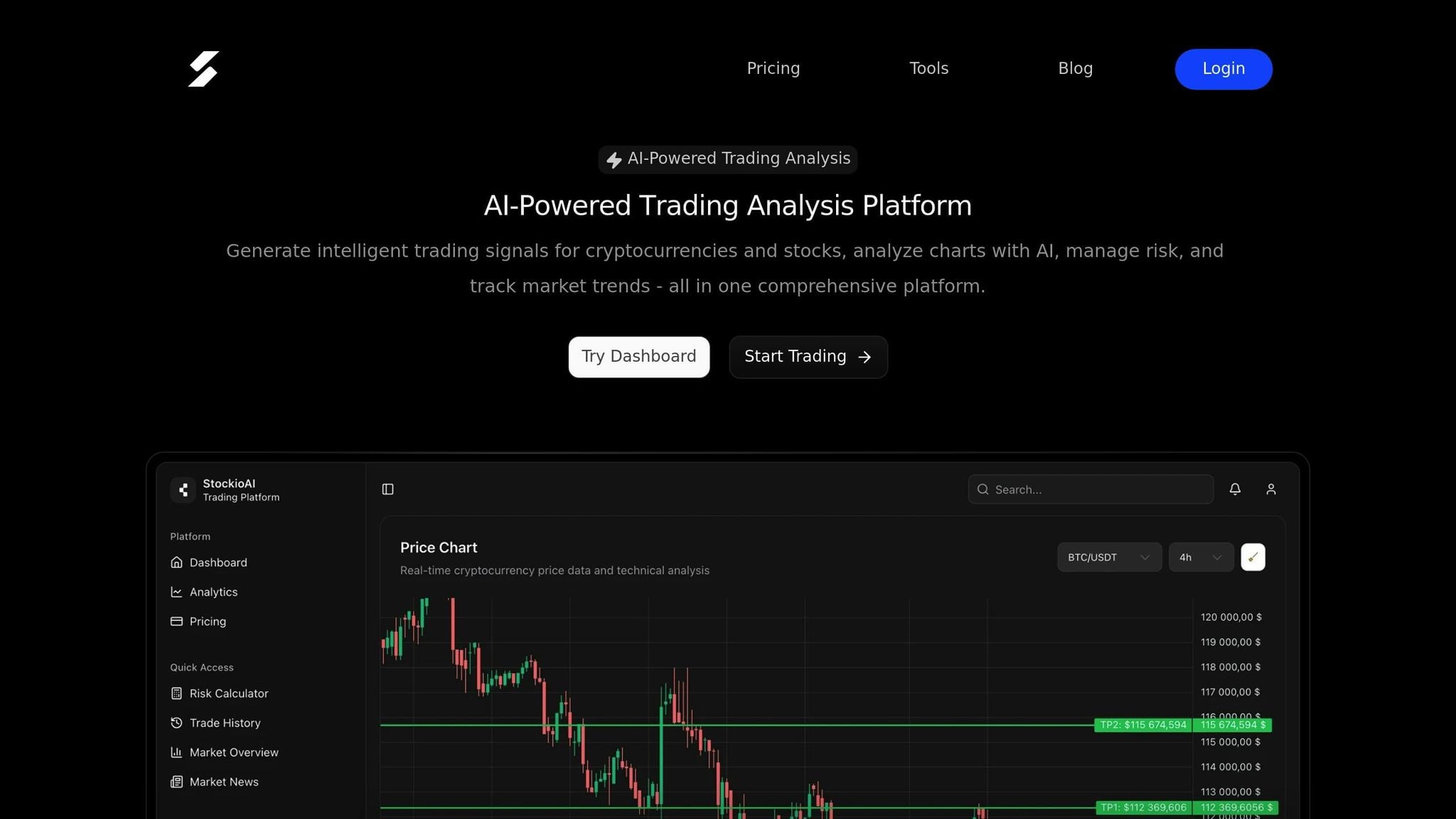

Crypto platforms live and die by speed. When trading signals are delayed even by milliseconds, profits are lost, and user trust erodes. This case study examines how a cryptocurrency platform completely revamped its data pipeline to process millions of data points per second with sub-second latency.

Key takeaways:

- Migrated to cloud-based infrastructure using AWS Kinesis, Google BigQuery, and Amazon Aurora MySQL, slashing latency by over 98%.

- Introduced early-stage data processing to validate and standardize data instantly, reducing unnecessary load by 40%.

- Automated monitoring and recovery systems ensured 99.99% uptime and zero data loss during market spikes.

- Performance upgrades cut operational costs by 66% and improved system throughput by 1000%+.

These changes not only improved speed but also enhanced user experience with faster dashboards, real-time trading signals, and reliable analytics.


Original Data Pipeline: Structure and Problems

The platform's original data infrastructure struggled to keep pace with the rapid demands of the cryptocurrency market. It relied on a patchwork of Python scripts managed by systemd to gather trading data from various exchanges. However, as data volumes surged, this setup quickly became a bottleneck.

Data Sources and Connection Problems

The data pipeline pulled information from multiple exchanges, utilizing REST APIs for historical data and WebSocket streams for real-time updates. Each exchange had its own unique formats, update intervals, and protocols, which made integration a challenge. Custom parsing and rate limiting were necessary but often led to dropped WebSocket connections, resulting in data gaps.

Without standardized data schemas, every price update had to go through several layers of validation and manual field mapping. This increased the likelihood of errors and made maintaining the system even harder as exchanges updated their APIs.

These connection issues became especially problematic during peak market activity, causing significant slowdowns.

System Slowdowns and Performance Issues

The pipeline’s limitations became glaringly obvious when market volatility spiked. Sequential processing and single-threaded validation caused backlogs during high-traffic periods. Adding to the strain, each data point required an individual database transaction, further slowing response times and consuming memory.
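The cost of committing every data point separately can be illustrated with a small sketch. It uses Python's built-in `sqlite3` as a stand-in for the production database (an assumption, since the original database is not named), and the `ticks` table and function names are hypothetical.

```python
import sqlite3

INSERT_SQL = "INSERT INTO ticks (symbol, price, ts) VALUES (?, ?, ?)"

def make_db():
    """Create an in-memory database with a hypothetical ticks table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE ticks (symbol TEXT, price REAL, ts INTEGER)")
    return conn

def insert_one_by_one(conn, rows):
    """Old pattern: one transaction (one commit) per data point."""
    for row in rows:
        conn.execute(INSERT_SQL, row)
        conn.commit()

def insert_batched(conn, rows):
    """Alternative: write the whole batch inside a single transaction."""
    with conn:  # commits once on success, rolls back on error
        conn.executemany(INSERT_SQL, rows)

rows = [("BTC-USD", 50000.0 + i, 1_700_000_000 + i) for i in range(1000)]
slow_db, fast_db = make_db(), make_db()
insert_one_by_one(slow_db, rows)   # 1000 commits
insert_batched(fast_db, rows)      # 1 commit
```

Both functions store the same rows; the difference is the number of commits. On a disk-backed or networked database, each commit adds a round trip or a sync, which is where the backlog described above would come from.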

On top of that, CPU-intensive validation processes clashed with real-time analytics, worsening performance just when the system was under the most pressure.

These bottlenecks didn’t just hurt performance - they also compromised data quality and user experience.

Effects on User Experience and Data Analysis

The delays in processing trading signals and displaying dashboard updates had a direct impact on users. Trading signals were often late, and dashboards sometimes displayed outdated market charts, especially during periods of high activity. This not only reduced the accuracy of AI-driven insights but also eroded user trust in the platform.

Fixing these issues was critical to building a pipeline capable of handling real-time, high-frequency trading demands.

Improvement Methods and Technical Solutions

The overhaul of the infrastructure didn’t rely on patchwork fixes. Instead, the team introduced three transformative strategies that completely restructured how data moved through the platform. These changes not only improved system performance but also laid the groundwork for future upgrades.

Moving to Scalable Cloud Systems

One of the most impactful changes was the migration to cloud-based infrastructure. AWS Kinesis became the core of real-time stream processing, handling millions of data points per second with sub-second latency.
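As a rough sketch of what publishing ticks to Kinesis might look like, the code below batches records for the `PutRecords` API, which accepts at most 500 records per request. The tick layout, the stream name, and the choice of the trading pair as partition key are assumptions for illustration, not details from the platform. The client is passed in, so the block runs without AWS credentials.

```python
import json

MAX_RECORDS_PER_CALL = 500  # PutRecords limit per request

def to_kinesis_entries(ticks):
    """Convert tick dicts into PutRecords entries, keyed by trading pair."""
    return [
        {"Data": json.dumps(t).encode("utf-8"), "PartitionKey": t["symbol"]}
        for t in ticks
    ]

def chunked(items, size):
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]

def publish(client, stream_name, ticks):
    """Send ticks in batches; return how many records Kinesis rejected."""
    failed = 0
    for batch in chunked(to_kinesis_entries(ticks), MAX_RECORDS_PER_CALL):
        response = client.put_records(StreamName=stream_name, Records=batch)
        failed += response.get("FailedRecordCount", 0)
    return failed

# Typical wiring (requires boto3 and AWS credentials, so left as a comment):
#   import boto3
#   publish(boto3.client("kinesis"), "market-ticks", ticks)
```

Using the trading pair as the partition key sends all records for one pair to the same shard. A non-zero return value means some records were rejected and would need to be resent.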

To handle data storage and transformation, Google BigQuery was integrated, offering the computational muscle to process both real-time and historical cryptocurrency data. For database operations, Amazon Aurora MySQL was adopted, eliminating the transaction bottlenecks that plagued the old system.

The move to the cloud delivered significant results, in line with what similar implementations have reported: a 98% reduction in data processing delays, an 80% cut in deployment and maintenance expenses, and a 66% drop in overall operational costs.

Additionally, AWS ECS enabled containerized processing, allowing the system to automatically scale in response to market activity. During periods of high trading volatility, extra containers would spin up within seconds to absorb the load, then scale back down once activity returned to normal levels.

Processing and Filtering Data Earlier

The new architecture prioritized early-stage data processing, transforming raw exchange data as soon as it entered the system. Instead of saving everything for later, the pipeline now validates, standardizes, and filters data right at the start.

Custom validation rules streamlined this process by automatically converting various timestamp formats, price units, and trading pair structures into a unified format. This eliminated the manual field mapping that previously caused delays and errors.
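A minimal sketch of this kind of normalization is shown below. The field names (`symbol`, `price`, `ts`), the list of quote currencies, and the seconds-versus-milliseconds heuristic are all assumptions for illustration; the platform's actual rules are not described in detail.

```python
from datetime import datetime, timezone
from decimal import Decimal

def normalize_timestamp(value):
    """Accept epoch seconds, epoch milliseconds, or ISO 8601; return UTC epoch ms."""
    if isinstance(value, (int, float)):
        # Heuristic (assumption): values above 1e11 are already milliseconds.
        return int(value) if value > 1e11 else round(value * 1000)
    parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    if parsed.tzinfo is None:
        parsed = parsed.replace(tzinfo=timezone.utc)  # treat naive times as UTC
    return round(parsed.timestamp() * 1000)

def normalize_pair(raw):
    """Map 'btcusdt', 'BTC/USDT', or 'BTC_USDT' to 'BTC-USDT'."""
    cleaned = raw.upper().replace("/", "-").replace("_", "-")
    if "-" in cleaned:
        return cleaned
    for quote in ("USDT", "USDC", "USD", "BTC", "ETH"):  # illustrative list
        if cleaned.endswith(quote) and len(cleaned) > len(quote):
            return f"{cleaned[:-len(quote)]}-{quote}"
    raise ValueError(f"unrecognized trading pair: {raw!r}")

def normalize_tick(raw):
    """Convert one exchange-specific message into the unified format."""
    return {
        "symbol": normalize_pair(raw["symbol"]),
        "price": Decimal(str(raw["price"])),  # Decimal avoids float rounding
        "ts_ms": normalize_timestamp(raw["ts"]),
    }
```

With rules like these in one place, supporting a new exchange format means adding a case to a normalizer rather than remapping fields by hand downstream.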

To handle problematic data, dead letter queues were introduced. These queues automatically redirected invalid data for later analysis and reprocessing, keeping the main data flow clean and uninterrupted.
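The routing idea behind a dead letter queue can be sketched in a few lines. Plain Python lists stand in for the real queues here (in production these would more likely be managed queues or streams, which is an assumption), and `validate_tick` is a hypothetical validator.

```python
def validate_tick(message):
    """Hypothetical validator: require a symbol and a positive numeric price."""
    price = float(message["price"])
    if price <= 0:
        raise ValueError("price must be positive")
    return {"symbol": message["symbol"], "price": price}

def route(raw_messages, validate, main_queue, dead_letters):
    """Send valid messages onward; park invalid ones with the failure reason."""
    for message in raw_messages:
        try:
            main_queue.append(validate(message))
        except (KeyError, TypeError, ValueError) as exc:
            # Keep the original payload so it can be inspected and replayed.
            dead_letters.append({"message": message, "error": repr(exc)})

main_queue, dead_letters = [], []
route(
    [
        {"symbol": "BTC-USD", "price": "50000"},
        {"symbol": "BTC-USD", "price": "-1"},   # invalid value
        {"price": "1"},                          # missing symbol
        {"symbol": "ETH-USD", "price": None},    # wrong type
    ],
    validate_tick,
    main_queue,
    dead_letters,
)
```

Storing the error next to the original message is what makes later analysis and reprocessing practical: a bad record never blocks the main flow, and nothing is silently discarded.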

The system also included duplicate detection algorithms, which identified and removed redundant price updates in real time. By filtering out unnecessary data within milliseconds, the downstream processing load was reduced by up to 40% during peak trading periods. This efficiency allowed StockioAI’s platform to process over 60 real-time data points per second, including technical indicators, market sentiment, and whale movements, all of which feed into its AI-driven trading signals[1].
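One simple way to detect duplicates in a stream is to remember recently seen keys in a bounded structure. The sketch below is an illustration of that idea, not the platform's algorithm; the key fields and the cache size are assumptions.

```python
from collections import OrderedDict

class RecentDeduplicator:
    """Remember the most recent keys and flag repeats (bounded memory)."""

    def __init__(self, max_entries=100_000):
        self._seen = OrderedDict()
        self._max_entries = max_entries

    def is_duplicate(self, tick):
        # Assumed identity of an update: exchange, pair, timestamp, price.
        key = (tick["exchange"], tick["symbol"], tick["ts_ms"], tick["price"])
        if key in self._seen:
            self._seen.move_to_end(key)  # refresh its recency
            return True
        self._seen[key] = None
        if len(self._seen) > self._max_entries:
            self._seen.popitem(last=False)  # evict the oldest key
        return False

dedup = RecentDeduplicator()
updates = [
    {"exchange": "ex1", "symbol": "BTC-USD", "ts_ms": 1, "price": "50000"},
    {"exchange": "ex1", "symbol": "BTC-USD", "ts_ms": 1, "price": "50000"},
    {"exchange": "ex1", "symbol": "BTC-USD", "ts_ms": 2, "price": "50001"},
]
unique = [u for u in updates if not dedup.is_duplicate(u)]
```

The bound on the cache trades completeness for memory: a duplicate that arrives after its key has been evicted would pass through, so the size should cover the window in which repeats are expected.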

Adding Automation and System Monitoring

Automation and monitoring were critical to ensuring the system’s reliability. Using Infrastructure as Code (IaC) with Terraform, the team created reproducible deployments, while custom dashboards tracked metrics like data ingestion rates, processing latency, and API response times. Automated alerts flagged any anomalies, ensuring rapid response to potential issues. All infrastructure changes were version-controlled, enabling quick rollbacks when necessary.

Google’s 2023 upgrade of its Bitcoin ETL pipeline showcased the benefits of automation. By leveraging GitOps workflows with Kubernetes, they built a fully reproducible system capable of syncing the entire Bitcoin blockchain in under 24 hours[3]. This approach ensured consistent updates across development and production environments.

StockioAI implemented additional measures, such as automated retry mechanisms with exponential backoff for external API calls. When exchange APIs temporarily failed, the system automatically retried requests with increasing delays, preventing widespread failures.
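A retry helper with exponential backoff might look like the sketch below. The parameter defaults, the set of retried exceptions, and the use of random jitter are assumptions; the source only states that delays increase between attempts.

```python
import random
import time

def call_with_backoff(func, max_attempts=5, base_delay=0.5, max_delay=30.0,
                      retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call func(); on a transient error wait base_delay * 2**attempt, then retry."""
    for attempt in range(max_attempts):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the error to the caller
            delay = min(max_delay, base_delay * 2 ** attempt)
            # Jitter (assumption): a random wait up to the computed delay
            # keeps many clients from retrying in lockstep.
            sleep(random.uniform(0, delay))
```

A call such as `call_with_backoff(lambda: fetch_ticker("BTC-USD"))` would wrap a hypothetical `fetch_ticker` function. The `sleep` parameter exists so tests can replace the real wait with a stub.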

The monitoring system also employed anomaly detection algorithms to identify unusual patterns in data. This helped catch potential issues, such as changes in exchange APIs or network disruptions, before they could affect trading signals or user experience.
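The source does not say which anomaly detection algorithms were used. As one common and simple option, the sketch below flags a value that sits more than a chosen number of standard deviations away from a rolling window of recent values. The window size, threshold, and warm-up count are hypothetical.

```python
from collections import deque
from statistics import mean, pstdev

class RollingAnomalyDetector:
    """Flag values that deviate strongly from a rolling window (z-score)."""

    def __init__(self, window=60, threshold=3.0, min_samples=10):
        self._values = deque(maxlen=window)
        self._threshold = threshold
        self._min_samples = min_samples

    def observe(self, value):
        """Return True if value looks anomalous; then add it to the window."""
        anomalous = False
        if len(self._values) >= self._min_samples:  # skip the warm-up phase
            mu = mean(self._values)
            sigma = pstdev(self._values)
            if sigma > 0:
                anomalous = abs(value - mu) / sigma > self._threshold
            else:
                anomalous = value != mu  # flat history: any change stands out
        self._values.append(value)
        return anomalous

detector = RollingAnomalyDetector()
# Steady ingestion rate (messages per second), then a sudden drop to zero.
flags = [detector.observe(v) for v in [100, 102] * 10 + [101, 0]]
```

Applied to a metric such as messages per second from one exchange, a sudden drop to zero would be flagged, which is the kind of signal that could point to an API change or a dropped connection. Note that this version also adds anomalous values to the window, so a sustained shift gradually becomes the new baseline.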

To further enhance reliability, checkpointing mechanisms were added throughout the pipeline. These recovery points allowed the system to resume processing from the last stable state after interruptions, ensuring no data was lost during updates or unexpected outages. This approach helped maintain the platform’s 99.99% uptime, a critical requirement for real-time trading decisions.
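Checkpointing can be sketched with a small file-based store that records the next offset to process. This is an illustration under assumptions: the file format, the `every` interval, and the function names are hypothetical, and a real pipeline would more likely store checkpoints in a durable service.

```python
import json
import os
import tempfile

class FileCheckpoint:
    """Persist the next offset to process, replacing the file atomically."""

    def __init__(self, path):
        self._path = path

    def load(self, default=0):
        try:
            with open(self._path, "r", encoding="utf-8") as handle:
                return json.load(handle)["offset"]
        except FileNotFoundError:
            return default

    def save(self, offset):
        directory = os.path.dirname(os.path.abspath(self._path))
        fd, tmp_path = tempfile.mkstemp(dir=directory)
        with os.fdopen(fd, "w", encoding="utf-8") as handle:
            json.dump({"offset": offset}, handle)
            handle.flush()
            os.fsync(handle.fileno())
        os.replace(tmp_path, self._path)  # readers see old or new, never partial

def process_from_checkpoint(records, checkpoint, handle_record, every=100):
    """Resume at the saved offset and save progress every `every` records."""
    start = checkpoint.load()
    for offset in range(start, len(records)):
        handle_record(records[offset])
        if (offset + 1) % every == 0:
            checkpoint.save(offset + 1)
    checkpoint.save(len(records))
```

After a crash, processing restarts at the last saved offset, so records handled since that checkpoint are processed again. This gives at-least-once delivery: nothing is lost, but downstream steps need to tolerate repeats, for example through duplicate detection.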

Scale Testing and Performance Results

Testing Methods and Process

To ensure the system could handle the demands of real-world market conditions, the team conducted a series of rigorous tests. Chaos engineering experiments simulated scenarios like API outages, sudden spikes in network latency, and data surges during volatile market periods.

The testing process included burst simulations to replicate volatility spikes, parallel processing validations to maintain consistent data ordering, and latency measurements at various stages of the pipeline. These tests focused on several critical areas: handling WebSocket streams, validating REST API endpoints, ensuring real-time OHLCV data accuracy, and measuring latency down to the millisecond.
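A burst test of this kind can be approximated with a small harness. The sketch below generates a synthetic burst with per-symbol sequence numbers, measures per-event latency, and checks that ordering survives processing. The event layout and function names are hypothetical, and the harness is far simpler than the tooling the team describes.

```python
import random
import statistics
import time

def generate_burst(n_events, symbols=("BTC-USD", "ETH-USD"), seed=42):
    """Create synthetic events with a per-symbol sequence number."""
    rng = random.Random(seed)
    next_seq = {s: 0 for s in symbols}
    events = []
    for _ in range(n_events):
        symbol = rng.choice(symbols)
        next_seq[symbol] += 1
        events.append({"symbol": symbol, "seq": next_seq[symbol],
                       "price": rng.uniform(1, 100)})
    return events

def run_burst(events, process):
    """Push events through process() and record per-event latency in ms."""
    outputs, latencies_ms = [], []
    for event in events:
        started = time.perf_counter()
        outputs.append(process(event))
        latencies_ms.append((time.perf_counter() - started) * 1000)
    return outputs, latencies_ms

def ordering_preserved(outputs):
    """True if sequence numbers strictly increase within each symbol."""
    last = {}
    for event in outputs:
        if event["seq"] <= last.get(event["symbol"], 0):
            return False
        last[event["symbol"]] = event["seq"]
    return True

def p99(values):
    """99th percentile: the last of the 99 cut points for n=100."""
    return statistics.quantiles(values, n=100)[98]
```

Replacing the `process` argument with the real pipeline stage under test turns this into a repeatable check: the burst is deterministic for a given seed, so latency percentiles can be compared between runs.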

Tools like Apache JMeter and custom-built load generators were employed to simulate the heavy loads and data bursts typical of exchange APIs[2]. The team also tested failover mechanisms and data loss prevention strategies, understanding that even brief disruptions could have a serious impact on trading decisions in fast-paced cryptocurrency markets.

Historical events, such as flash crashes and scheduled maintenance windows, were recreated to stress-test the system. These tests verified that data ordering and consistency remained intact under extreme conditions, laying the groundwork for the performance improvements detailed below.

Performance Numbers and Improvements

The optimized pipeline delivered dramatic improvements across the board, achieving sub-second latency - a huge leap from the previous delays of several seconds.

Throughput capacity skyrocketed, with the system now capable of processing millions of data points per second, compared to just thousands previously. These optimizations also had a significant impact on cost efficiency. Deployment and maintenance expenses dropped by over 80%, while operational costs were reduced by 66%. Additionally, the time required to create new data pipelines was slashed by more than 80%; tasks that once took two people four hours now required just one person and 30 minutes.

| Performance Metric | Before Optimization | After Optimization | Improvement |
|---|---|---|---|
| Data Processing Latency | 3–5 seconds | < 500 milliseconds | Over 98% reduction |
| Throughput Capacity | Thousands/second | Millions/second | 1000%+ increase |
| Pipeline Creation Time | 4 hours (2 people) | 30 minutes (1 person) | Over 80% reduction |
| Operational Costs | Baseline | 34% of original | 66% decrease |
| System Uptime | 99.5% | 99.99% | 0.49 percentage points |
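To make the table's percentages easier to interpret, the short calculation below recomputes them from the before and after values. It uses only figures from the table; the one assumption is a 365-day year for the downtime estimate.

```python
def percent_reduction(before, after):
    """Percentage drop from `before` to `after`."""
    return (before - after) / before * 100

# Latency: 3-5 s before; 0.5 s is the table's upper bound for "after".
latency_reduction_at_bound = [percent_reduction(b, 0.5) for b in (3.0, 5.0)]
# Latency (in seconds) that a 98% reduction from 3-5 s would imply.
implied_latency_s = [round(b * (1 - 0.98), 3) for b in (3.0, 5.0)]

# Pipeline creation time: elapsed hours vs. person-hours.
elapsed_reduction = percent_reduction(4.0, 0.5)
person_hours_reduction = percent_reduction(2 * 4.0, 1 * 0.5)

# Uptime: expected downtime per 365-day year, in hours.
HOURS_PER_YEAR = 365 * 24
downtime_before_h = (1 - 0.995) * HOURS_PER_YEAR
downtime_after_h = (1 - 0.9999) * HOURS_PER_YEAR

print(latency_reduction_at_bound, implied_latency_s)
print(elapsed_reduction, person_hours_reduction)
print(round(downtime_before_h, 1), round(downtime_after_h, 2))
```

At the 500-millisecond bound the latency reduction works out to roughly 83% to 90%, so the reported figure of over 98% corresponds to typical latencies of about 60 to 100 milliseconds, well under the bound. Pipeline creation drops by 87.5% in elapsed time and 93.75% in person-hours. The uptime change looks small as a percentage but cuts expected downtime from about 43.8 hours to under one hour per year.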

The system has maintained 99.99% uptime since its deployment, with no data loss recorded - even during periods of intense market volatility.

How This Improved StockioAI's Performance

These performance upgrades had a direct and transformative impact on StockioAI's trading capabilities. Signal generation latency dropped to under 500 milliseconds, enabling near real-time trading signals. With faster processing, StockioAI could analyze a greater number of trading pairs without sacrificing accuracy.

The improved speed also enhanced the platform's ability to handle technical indicators, market sentiment analysis, and whale movement detection. The availability of complete, real-time market snapshots allowed the AI to generate more precise and timely trading recommendations.

From a user perspective, the experience improved dramatically. Dashboards loaded faster, charts were more responsive, and alerts were delivered almost instantly. Even the risk calculator, tasked with processing complex scenarios, completed its calculations in milliseconds instead of seconds.

The cost savings from the optimized system allowed StockioAI to introduce advanced analysis features without increasing operational expenses. This scalability meant the platform could support more users simultaneously while maintaining a high-quality experience, aligning perfectly with the company’s growth goals.

Perhaps most critically, the end-to-end latency - from receiving exchange data to notifying users - dropped to under one second during normal market conditions. This improvement proved invaluable during volatile market movements, where every second can make a significant difference.

Key Takeaways and Practical Guidelines

To ensure your crypto data pipelines remain scalable, reliable, and secure, here are some practical strategies to consider.

Building Flexible and Expandable Systems

When designing data pipelines, modular architecture is a game changer. Instead of creating rigid, monolithic systems that demand complete overhauls for updates, aim for loosely connected components. This way, individual parts can be updated or replaced without disrupting the entire system.

Cloud-native tools like AWS Kinesis, Google BigQuery, and Kubernetes are particularly useful. They allow for automatic scaling, which is critical during unpredictable market surges. For example, during sudden spikes in trading volumes, these tools can adjust resources dynamically - no manual intervention needed.

To maintain consistency and avoid costly rework, use version-controlled infrastructure tools like Terraform. This ensures environments align with the desired state and makes rolling back changes a breeze when problems arise. Such flexibility is invaluable as new exchanges, trading pairs, or analytics requirements come into play.

Your data models also need to be adaptable. They should handle new fields or data sources without breaking existing workflows. Regularly reviewing your pipeline can help identify bottlenecks and ensure it’s ready to support new analytics features as they’re introduced.
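One way to keep a data model tolerant of new fields is to give later additions default values and collect anything unrecognized instead of rejecting it. The `Tick` class below is a hypothetical sketch of that pattern, not the platform's schema.

```python
from dataclasses import dataclass, field, fields

@dataclass(frozen=True)
class Tick:
    symbol: str
    price: float
    ts_ms: int
    volume: float = 0.0  # a later addition: the default keeps old payloads valid
    extras: dict = field(default_factory=dict)  # unknown fields are kept here

    @classmethod
    def from_payload(cls, payload):
        """Build a Tick, routing unrecognized keys into `extras`."""
        known = {f.name for f in fields(cls)} - {"extras"}
        core = {k: v for k, v in payload.items() if k in known}
        extras = {k: v for k, v in payload.items() if k not in known}
        return cls(**core, extras=extras)

old_style = Tick.from_payload({"symbol": "BTC-USD", "price": 50000.0, "ts_ms": 1})
new_style = Tick.from_payload(
    {"symbol": "BTC-USD", "price": 50000.0, "ts_ms": 2,
     "volume": 1.5, "funding_rate": 0.0001}  # funding_rate is not modeled yet
)
```

Existing consumers keep reading `symbol`, `price`, and `ts_ms` unchanged, while a new field from an exchange is preserved in `extras` until the model is extended to handle it.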

System Monitoring and Backup Plans

In crypto trading, even a brief system disruption can have major financial consequences. That’s why comprehensive monitoring is non-negotiable. Real-time tracking of latency, resource usage, and anomalies helps maintain system health and ensures data flows smoothly through the pipeline.

Effective monitoring should cover several key areas:

- Latency: Monitor how quickly data moves through the system.

- Throughput: Keep an eye on the system’s capacity to handle data.

- Error Rates: Identify and fix issues before they escalate.

- Data Quality: Ensure the integrity of the data being processed.
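The four areas above can be combined into a single health report per time window. The sketch below is one possible shape for it; the 500-millisecond latency threshold echoes the target in this case study, while the other thresholds and all names are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class WindowStats:
    latencies_ms: list     # per-message latency samples
    processed: int         # messages handled successfully
    errors: int            # messages that failed processing
    invalid: int           # processed messages that failed quality checks
    window_seconds: float  # length of the measurement window

def health_report(stats, max_p95_ms=500.0, max_error_rate=0.01,
                  max_invalid_rate=0.05):
    """Summarize latency, throughput, error rate, and data quality."""
    ordered = sorted(stats.latencies_ms)
    # Simple index-based p95 estimate over the sorted samples.
    p95 = ordered[int(0.95 * (len(ordered) - 1))] if ordered else 0.0
    total = stats.processed + stats.errors
    report = {
        "p95_latency_ms": p95,
        "throughput_per_s": stats.processed / stats.window_seconds,
        "error_rate": stats.errors / total if total else 0.0,
        "invalid_rate": stats.invalid / stats.processed if stats.processed else 0.0,
    }
    alerts = []
    if report["p95_latency_ms"] > max_p95_ms:
        alerts.append("latency")
    if report["error_rate"] > max_error_rate:
        alerts.append("error_rate")
    if report["invalid_rate"] > max_invalid_rate:
        alerts.append("data_quality")
    report["alerts"] = alerts
    return report
```

Throughput is reported rather than alerted on, since a sensible floor depends on the time of day and the market; anomaly detection against recent history is usually a better fit for that metric than a fixed threshold.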

By implementing automated monitoring and recovery processes, you can achieve near-perfect uptime (99.99%) and prevent data loss, even during volatile market conditions. Automated failover mechanisms can address processing errors on the fly, ensuring seamless operations. Redundant storage across multiple locations further minimizes the risk of data loss, while regular automated backups provide reliable recovery points.

Another critical piece is having clear runbooks and documentation. These resources guide teams during incidents, and regular testing of recovery procedures ensures they’re effective when real problems arise. Investing in disaster recovery planning pays off, especially during unexpected market stress.

Data Security and Privacy Protection

Handling sensitive data in the crypto space requires a robust, multi-layered security approach. Strong encryption, such as AES-256 for data at rest and TLS for data in transit, keeps data secure as it moves through and is stored by the pipeline. On top of that, role-based access controls ensure only authorized personnel can access specific data.

Audit logs are another essential tool. They track all data access and modifications, creating a detailed forensic trail. This is especially important for compliance with regulations like the Gramm-Leach-Bliley Act, which governs financial data privacy in the U.S.

To prevent security breaches, use parameterized queries for database access and proper secrets management for connection credentials. Conduct regular security reviews and penetration testing to identify potential vulnerabilities before anyone else does.
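The parameterization advice above can be illustrated with Python's built-in `sqlite3` module as a stand-in database (an assumption; the same placeholder idea applies to other drivers, though the placeholder syntax varies). The `ticks` table and `latest_price` function are hypothetical.

```python
import sqlite3

def latest_price(conn, symbol):
    """Look up the most recent price for a symbol with a bound parameter."""
    # The ? placeholder makes the driver treat `symbol` strictly as data,
    # so quotes or SQL keywords inside it cannot change the statement.
    row = conn.execute(
        "SELECT price FROM ticks WHERE symbol = ? ORDER BY ts DESC LIMIT 1",
        (symbol,),
    ).fetchone()
    return row[0] if row else None

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE ticks (symbol TEXT, price REAL, ts INTEGER)")
with conn:
    conn.executemany(
        "INSERT INTO ticks VALUES (?, ?, ?)",
        [("BTC-USD", 50000.0, 1), ("BTC-USD", 50100.0, 2)],
    )

normal = latest_price(conn, "BTC-USD")
# A hostile input is matched literally and finds no rows.
hostile = latest_price(conn, "BTC-USD'; DROP TABLE ticks; --")
```

The hostile string is compared as an ordinary value, so the table is left untouched. Building the same query with string formatting would instead splice that input into the SQL text.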

Adopting a least-privilege access policy ensures that individuals and systems only access the data they absolutely need. Regularly review permissions to keep up with evolving team roles and responsibilities.

For platforms like StockioAI, these security measures are essential. They safeguard trading signals, market analytics, and risk management tools, ensuring traders can rely on accurate and secure data for their decisions.

Conclusion

This case study highlights how targeted technical upgrades turned bottlenecked pipelines into high-performance systems, driving measurable business outcomes. By addressing connection issues and system slowdowns, StockioAI achieved sub-second latency and 99.99% uptime[2], showcasing the power of a thoughtful and well-executed technical strategy.

The benefits are undeniable. Industry examples show that similar migrations often lead to lower costs and faster scalability - key advantages in a competitive landscape where every millisecond counts.

For StockioAI, these advancements translate into faster, more precise AI signals, real-time dashboards, and timely risk alerts. In trading, the difference between delayed data and instant analytics can determine whether a trade is profitable or a missed opportunity.

The shift to a cloud-native, containerized architecture replaced rigid legacy systems, enabling seamless scaling and reliable performance during trading surges. Automated scaling ensures systems stay responsive, even under the pressure of volatile market conditions and surging trading volumes.

This case study underscores the value of early investments in scalable infrastructure. Instead of waiting for performance issues to escalate, successful platforms build systems designed to evolve alongside their user base and market demands. Features like comprehensive monitoring, automated recovery capabilities, and strong security measures provide a solid foundation for sustained growth.

In the fast-paced, 24/7 cryptocurrency market, reliable data pipelines aren’t just helpful - they’re essential. Platforms that effectively tackle these technical challenges, as StockioAI has done, are better equipped to deliver the real-time analytics and insights traders demand. For StockioAI, adopting these strategies ensures their AI-driven tools remain competitive, paving the way for continued progress in AI-powered trading.

FAQs

How does moving to a cloud-based infrastructure enhance the speed and reliability of cryptocurrency data pipelines?

Migrating to a cloud-based infrastructure can greatly enhance the speed and dependability of cryptocurrency data pipelines. This is largely thanks to the scalability and performance capabilities that cloud services offer. With cloud platforms, resources can be adjusted dynamically in real time, allowing systems to handle surges in data traffic during peak trading hours or sudden market activity without sacrificing performance.

Cloud-based systems also come equipped with redundancy and failover mechanisms, which keep data pipelines running smoothly even if unexpected hardware failures or outages occur. This level of reliability is crucial in the high-stakes environment of cryptocurrency trading, where downtime can lead to significant losses. By focusing on speed and stability, cloud infrastructure enables platforms to process data more efficiently, provide real-time insights, and deliver a better overall experience for users.

What are the main advantages of incorporating early-stage data processing into a cryptocurrency platform's data pipeline?

Implementing early-stage data processing in a cryptocurrency platform’s data pipeline brings some clear advantages. For starters, it boosts the pipeline's performance and efficiency by handling raw data closer to its source. Tasks like filtering, transforming, and organizing data at this stage help cut down the amount of information that needs to be processed later, saving both time and computational power.

Another big win is speed. Early-stage processing allows platforms to generate critical insights - like trading signals or market trends - much faster. In the fast-moving world of cryptocurrency, where decisions often need to be made in seconds, this speed can make a huge difference.

By streamlining data management from the beginning, platforms can offer users more dependable and actionable analytics, ultimately enhancing the trading experience.

How does automated monitoring and recovery ensure high uptime and prevent data loss in cryptocurrency trading systems?

Automated monitoring and recovery are essential for keeping cryptocurrency trading systems running smoothly. These tools constantly observe system performance, spotting unusual behavior in real time. By catching potential problems early, they can step in to prevent downtime and avoid disruptions that might otherwise result in data loss.

On top of that, automated recovery systems quickly bring operations back online after unexpected failures. This ensures that critical data pipelines stay intact, safeguarding valuable trading information. With accurate, up-to-date data always available, traders can continue making well-informed decisions without interruption.