AI is reshaping trading platforms, powering faster execution, risk analysis, and fraud detection. However, this progress creates a conflict: safeguarding systems from cyber threats (security) while meeting regulatory demands for transparency (compliance). Both are critical, but they often clash.

Key takeaways:

- Security risks: Include data breaches, adversarial attacks, and model tampering. These can lead to financial losses, stolen data, and operational disruptions.

- Compliance risks: Focus on adhering to laws like SEC rules, ensuring transparency, and keeping proper records. Failures here result in fines and reputational damage.

- Real-world examples: Firms like Two Sigma and Interactive Brokers faced millions in fines for algorithm flaws and compliance lapses in 2024-2025.

- Balancing both: Requires strong governance, real-time monitoring, and collaboration across teams to address overlapping risks.

This article explores how trading platforms can manage these challenges effectively, ensuring both secure operations and adherence to regulations.

Security Risks in AI Trading Platforms

AI trading systems face a trio of security threats that jeopardize both the safety of sensitive data and the trust of users. Despite 85% of organizations incorporating AI into their operations, security measures often lag behind this rapid adoption, leaving exploitable vulnerabilities in their wake [6]. These issues highlight the complex challenges AI trading platforms must navigate to ensure secure operations.

Data Breaches and Unauthorized Access

Trading platforms handle a wealth of sensitive data, including personal information and trading strategies. Cybercriminals often exploit this by employing techniques like membership and attribute inference attacks. These methods allow attackers to determine whether specific data was part of the training set or even extract sensitive details directly from AI model outputs [6]. Centralized data storage, such as data lakes, amplifies the damage of a breach. Without robust encryption and strict access controls, client information and proprietary strategies are left vulnerable [6].

Adversarial Attacks on AI Models

Adversarial attacks target AI systems by feeding them manipulated input data, causing the models to make erroneous predictions. A striking example of a closely related technique surfaced in 2024, when researchers showed how Slack's AI features could be exploited through prompt injection. By embedding malicious instructions into uploaded files, an attacker could trick the AI into leaking sensitive information from private channels and generating phishing links [6]. On a trading platform, evasion attacks at inference time can lead to unintended trades, while data poisoning during training corrupts the model’s logic by introducing harmful data into the training set [6]. Traders can use a crypto trading signal simulator to model the potential outcomes of such trades. The opaque nature of machine learning models - often referred to as "black boxes" - makes these attacks even more hazardous. As FINRA notes:

"Some ML models... are described as 'black boxes' because it may be difficult or impossible to explain how the model works (i.e., how its predictions or outcome are generated)" [1].

These manipulations distort market signals and increase systemic risk. They also make it harder for a crypto market trend analyzer to provide accurate insights, and the effects ripple across trading operations.
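One common first line of defense against manipulated inputs is to sanity-check features before they reach the model. The sketch below is only an illustration of that idea: the feature names, the numeric limits in `BOUNDS`, and the `validate_features` helper are all assumptions made for this example, not part of any cited framework. Checks like these catch crude manipulation; they will not stop a subtle perturbation that stays within plausible ranges.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureBounds:
    low: float       # smallest plausible value
    high: float      # largest plausible value
    max_step: float  # largest plausible change since the previous observation


# Hypothetical limits; a real platform would derive these from its own data.
BOUNDS = {
    "price": FeatureBounds(low=0.01, high=1_000_000.0, max_step=50.0),
    "volume": FeatureBounds(low=0.0, high=1e9, max_step=5e6),
}


def validate_features(current: dict, previous: dict, bounds: dict = BOUNDS) -> list:
    """Return a list of violations; an empty list means the input is accepted."""
    problems = []
    for name, rule in bounds.items():
        value = current.get(name)
        if value is None or not math.isfinite(value):
            problems.append(f"{name}: missing or non-finite")
            continue
        if not rule.low <= value <= rule.high:
            problems.append(f"{name}: {value} outside [{rule.low}, {rule.high}]")
        prev = previous.get(name)
        if prev is not None and abs(value - prev) > rule.max_step:
            problems.append(f"{name}: jump of {abs(value - prev):.2f} exceeds {rule.max_step}")
    return problems


previous_tick = {"price": 101.2, "volume": 12_000.0}
clean = validate_features({"price": 101.9, "volume": 15_500.0}, previous_tick)
suspect = validate_features({"price": 480.0, "volume": float("nan")}, previous_tick)

print(clean)
print(suspect)
```

Inputs that fail validation would typically be quarantined and logged rather than silently dropped, so that both security and compliance teams can review them.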

Model Tampering and Integrity Risks

The integrity of AI models is another critical concern. Unauthorized modifications can compromise trading decisions, eroding the platform’s reliability. Attackers may make undetectable functional changes to models, while supply chain vulnerabilities arise from using compromised open-source datasets with inadequate security measures [6]. Additionally, the rise of "shadow AI" - unauthorized AI tools used by employees - introduces unmonitored risks. Such tools can expose proprietary data to third-party models, creating compliance and security headaches [6]. Auditing these complex models is no easy task, as Wiz explains:

"The less you understand AI logic, the harder it is to perform testing, which leads to reduced trust and an increased risk of exploitation" [6].

These integrity risks underscore the importance of thorough oversight and robust security protocols.
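A simple way to detect unauthorized model changes is to record a cryptographic digest of each approved model file and verify it before loading. The following is a minimal sketch using only Python's standard library; the file name and the idea of storing the digest in a model inventory are assumptions for illustration.

```python
import hashlib
import hmac
import tempfile
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_artifact(path: Path, approved_digest: str) -> bool:
    """True only if the file still matches the digest recorded at approval."""
    return hmac.compare_digest(sha256_of_file(path), approved_digest)


# Demo with a throwaway file standing in for a serialized model.
with tempfile.TemporaryDirectory() as tmp:
    model_path = Path(tmp) / "model.bin"  # hypothetical artifact name
    model_path.write_bytes(b"approved model weights")
    approved = sha256_of_file(model_path)  # would be stored in the inventory

    untouched_ok = verify_model_artifact(model_path, approved)

    # Simulate tampering after approval.
    model_path.write_bytes(b"approved model weights + hidden change")
    tampered_ok = verify_model_artifact(model_path, approved)

print(untouched_ok, tampered_ok)
```

A digest check only proves the file is unchanged since approval. It says nothing about whether the approved model or its training data was trustworthy in the first place, which is why supply chain review still matters.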

| Vulnerability Type | Impact on Data Protection | Impact on User Trust |
|---|---|---|
| Prompt Injection | Exposure of private channel data and PII | High; enables deceptive phishing schemes |
| Data Poisoning | Corrupts the integrity of training datasets | Reduces reliability of trading decisions |
| Inference Attacks | Leakage of sensitive individual attributes | Erodes privacy guarantees |
| Shadow AI | Proprietary data exposed to third-party models | Creates unmonitored compliance risks |
| Model Evasion | Manipulation of trading outputs/predictions | Leads to unexpected financial losses |

Compliance Risks in AI Trading Platforms

Technical security measures might safeguard systems, but compliance ensures those systems operate within regulatory boundaries. Unlike technical vulnerabilities, compliance risks draw direct regulatory scrutiny. U.S. regulators emphasize that their rules are "technology neutral", meaning the same obligations for supervision, recordkeeping, and risk management strategies apply to AI systems as they do to manual processes [5]. This creates a tough challenge for platforms, which must demonstrate that their AI tools meet regulatory standards originally designed for human decision-makers.

Regulatory Oversight and Requirements

AI trading platforms fall under the watchful eyes of the SEC, FINRA, and CFTC, each enforcing specific rules that demand rigorous oversight and documentation. For instance, FINRA Rules 3110 and 3120 require firms to establish supervisory systems "reasonably designed" to comply with securities laws [1]. The stakes for non-compliance are high. In early 2025, Two Sigma and Interactive Brokers faced substantial fines after algorithmic changes bypassed internal controls, exposing weaknesses in monitoring [5]. These cases highlight a critical reality: platforms must maintain thorough oversight of every AI model from its development to deployment.

Transparency in AI Decision-Making

One of the most significant hurdles in compliance is the opaque or "black box" nature of many machine learning models. When a platform cannot clearly explain how an AI model makes decisions, it becomes nearly impossible to ensure those decisions comply with securities laws - a core requirement under FINRA Rule 3110 [1]. Former SEC Chair Gary Gensler raised concerns about potential conflicts of interest in these systems:

"If the robo-adviser or the brokerage app is using a function… to optimize for its own interests... is it then communicating with investors because it will be good for their investment decisions, or because it might benefit the firm's revenues, profits, or other interests?" [3]

Without transparency, firms risk falling into "ex-post rationalization", where they attempt to justify AI decisions based on surface-level correlations rather than the model's actual logic. This lack of clarity also makes it difficult to detect demographic or historical biases in training data, potentially violating FINRA Rule 2010's requirement for "just and equitable principles of trade" [1]. The regulatory focus on AI is intensifying - evident in the SEC's creation of an AI Task Force in August 2025 and the appointment of a Chief Artificial Intelligence Officer to oversee AI governance [11]. This opacity not only complicates compliance but also undermines the reliability of audit trails, which is a critical issue discussed next.

Audit Trail Deficiencies

Regulations like Exchange Act Rules 17a-3 and 17a-4, along with FINRA Rule 4510, demand complete recordkeeping of AI-driven decisions [1]. When platforms fail to maintain proper audit trails, they are unable to demonstrate compliance during regulatory examinations. In 2024, Brex Treasury faced hefty penalties for inadequate recordkeeping related to its identity-verification algorithm [5]. This case underscores a key point: outsourcing AI functions to third-party vendors does not absolve firms of their compliance responsibilities. As FINRA states:

"Outsourcing an activity or function to a third-party does not relieve them of their ultimate responsibility for compliance with all applicable securities laws and regulations and FINRA rules." [1]

Poor monitoring and recordkeeping can obscure violations across a range of regulatory areas, from market manipulation to anti-money laundering lapses. These gaps may only become apparent during enforcement actions. This makes meticulous recordkeeping just as essential as robust technological safeguards for upholding market integrity.
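To make the idea of a reliable audit trail concrete, here is a minimal sketch of a tamper-evident log in which each entry's hash covers the previous entry's hash. The record fields (`ts`, `model`, `decision`, and so on) and the `AuditLog` class are hypothetical, and a structure like this is an illustration only: it does not by itself satisfy the storage and retention requirements of the rules cited above.

```python
import hashlib
import json
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder hash that precedes the first entry


def entry_hash(prev_hash: str, record: dict) -> str:
    """Hash the previous hash together with a canonical JSON form of the record."""
    payload = json.dumps(record, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    entries: list = field(default_factory=list)

    def append(self, record: dict) -> None:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append(
            {"record": record, "prev_hash": prev_hash, "hash": entry_hash(prev_hash, record)}
        )

    def verify(self) -> bool:
        """Recompute the whole chain; any edited or reordered entry breaks it."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev_hash"] != prev_hash:
                return False
            if entry["hash"] != entry_hash(prev_hash, entry["record"]):
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append({"ts": "2025-01-15T14:30:00Z", "model": "signal-v3", "symbol": "XYZ",
            "decision": "BUY", "confidence": 0.82})
log.append({"ts": "2025-01-15T14:31:10Z", "model": "signal-v3", "symbol": "XYZ",
            "decision": "HOLD", "confidence": 0.55})

intact = log.verify()
log.entries[0]["record"]["decision"] = "SELL"  # simulate an after-the-fact edit
tampered = log.verify()

print(intact, tampered)
```

Recording the model version alongside each decision, as the hypothetical `model` field does here, is what lets a reviewer later tie an outcome back to the exact model that produced it.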

Security vs. Compliance: Direct Comparison

When it comes to AI trading platforms, security and compliance risks might appear similar at first glance, but they address entirely different vulnerabilities and require unique strategies. Security risks revolve around technical threats, such as data breaches, adversarial manipulation of AI models, or unauthorized interference with trading algorithms. On the other hand, compliance risks focus on meeting legal and regulatory requirements. These include ensuring transparency in AI decision-making, addressing data bias in trading models, and maintaining complete audit trails. Understanding this distinction is crucial for grasping how these risks play out in daily operations.

The consequences of these risks diverge significantly. A security breach can lead to immediate technical issues, such as system downtime, theft of proprietary trading strategies, or direct financial losses from compromised accounts. Meanwhile, compliance failures often result in legal and regulatory penalties, even if the technical systems remain operational. These contrasting outcomes demand tailored mitigation strategies and resource planning.

However, these risks often intersect. For example, a data breach exposing customer information represents both a security issue (unauthorized access) and a compliance violation (breaching SEC Regulation S-P) [1]. Similarly, the use of "black box" AI models poses dual challenges: protecting the proprietary logic of these models is essential for security, yet regulators require explainability to ensure legal transparency [1]. These overlapping concerns highlight the need for a balanced approach that safeguards technical integrity while adhering to regulatory standards.

Comparison Table: Security Risks vs. Compliance Risks

The table below outlines the key differences between security and compliance risks:

| Feature | Security Risks | Compliance Risks |
|---|---|---|
| Core Focus | Protecting data integrity and system perimeter | Ensuring fairness, transparency, and legality |
| Key Threats | Data breaches, adversarial attacks, model tampering | Transparency gaps, data bias, audit trail deficiencies |
| Operational Impact | System downtime, theft of intellectual property, financial loss | Regulatory penalties, reputational damage, license loss |
| Mitigation Approach | Encryption, multi-factor authentication, SOC audits | Model Risk Management (MRM), explainability documentation, human review |
| Regulatory Examples | SEC Market Access Rule, NIST Frameworks | FINRA Rule 3110 (Supervision), SEC Regulation S-P |

To allocate resources effectively, platforms must recognize these distinctions. Security measures like penetration testing and access controls are essential for protecting technical systems. At the same time, compliance frameworks - such as maintaining model inventories and incorporating human-in-the-loop reviews - ensure operations remain within legal boundaries. As FINRA emphasizes:

"Firms' increasing reliance on technology for many aspects of their customer-facing activities, trading, operations, back-office, and compliance programs... exposes firms to technology-related compliance and other risks" [1].

How to Balance Security and Compliance in AI Trading

Striking the right balance between security and compliance in AI trading means creating systems where these two priorities work seamlessly together. One helpful framework to illustrate this is the "10/20/70 rule" - 10% reliance on algorithms, 20% on technology infrastructure, and 70% on people and culture. This rule highlights that technology alone can’t solve everything; human collaboration and oversight are just as crucial [15]. To achieve this, trading platforms need cross-functional teams that bring together data scientists, compliance officers, legal experts, and IT security professionals.

Governance Frameworks and Risk Management

A strong governance framework starts with oversight committees. These committees, made up of experts in legal, compliance, IT, and trading, should monitor AI systems throughout their lifecycle [12]. One key responsibility of these groups is to maintain a detailed inventory of all AI tools in use, documenting how each system makes decisions. This documentation not only helps with internal security but also ensures transparency for regulators. As Chris Stanley, founder of Beach Street Legal, aptly puts it, quoting ChatGPT: "With great algorithmic power comes great regulatory responsibility" [12].

Platforms should also adopt tiered risk management frameworks, like those outlined in the EU AI Act. These frameworks categorize AI systems by risk levels - unacceptable, high, limited, or minimal - and apply appropriate controls to each [13]. For instance, high-risk trading algorithms might require human oversight to allow manual intervention during unusual market conditions. This approach prevents system failures and ensures trades remain explainable and aligned with fiduciary responsibilities [12][14]. These governance practices also set the foundation for real-time threat detection.
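A model inventory with risk tiers can be as simple as a small data structure that compares the controls each model has against those its tier requires. The sketch below uses the four tier names mentioned above; the control names and the mapping from tier to required controls are assumptions for illustration, not requirements taken from the EU AI Act or any regulator.

```python
from dataclasses import dataclass
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical mapping of tier to required controls; None means "do not deploy".
REQUIRED_CONTROLS = {
    RiskTier.UNACCEPTABLE: None,
    RiskTier.HIGH: {"human_oversight", "explainability_doc", "audit_logging"},
    RiskTier.LIMITED: {"explainability_doc", "audit_logging"},
    RiskTier.MINIMAL: {"audit_logging"},
}


@dataclass(frozen=True)
class ModelRecord:
    name: str
    purpose: str
    tier: RiskTier
    controls: frozenset  # controls currently in place for this model


def missing_controls(record: ModelRecord) -> set:
    """Return the controls the model's tier requires but the model lacks."""
    required = REQUIRED_CONTROLS[record.tier]
    if required is None:
        raise ValueError(f"{record.name}: tier {record.tier.value!r} may not be deployed")
    return required - record.controls


signal_model = ModelRecord(
    name="signal-v3",  # hypothetical model
    purpose="generates buy/sell signals",
    tier=RiskTier.HIGH,
    controls=frozenset({"audit_logging"}),
)
gaps = missing_controls(signal_model)
print(sorted(gaps))
```

An oversight committee could run a check like this across the full inventory before each release, so that gaps surface during review rather than during an examination.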

Real-Time Monitoring and Threat Detection

Constant monitoring is a must for identifying both cyber threats and regulatory issues. AI-powered tools can flag unauthorized access attempts and detect abusive trading activities like wash trading or frontrunning. The Commodity Futures Trading Commission (CFTC) mandates that regulated entities "maintain compliance staff and resources sufficient to conduct effective audit trail reviews, trade practice surveillance, market surveillance, and real-time market monitoring" [2].

Although 76% of organizations believe adversaries benefit more from generative AI than defenders do [9], platforms can gain the upper hand by using AI frameworks tuned to their own environment. These systems draw on context-specific data to automatically detect, prevent, and address threats before they escalate. Features like "kill switches" can immediately halt trading when anomalies threaten market integrity or security [10]. Alongside internal measures, keeping a close eye on third-party vendors is another layer of protection.
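The combination of anomaly detection and a kill switch can be sketched in a few lines. This example flags an order rate that sits far outside its recent history using a z-score and latches a halt flag when it does. The `KillSwitch` class, the order-rate metric, and the threshold of 4.0 are assumptions chosen for illustration; production systems generally use richer signals and tested thresholds.

```python
from statistics import mean, stdev


class KillSwitch:
    """Latching halt flag: once tripped, it stays tripped until manually reset."""

    def __init__(self) -> None:
        self.halted = False
        self.reason = None

    def trip(self, reason: str) -> None:
        if not self.halted:  # keep the first reason for the audit trail
            self.halted = True
            self.reason = reason

    def reset(self) -> None:
        """Intended to be called only after human review."""
        self.halted = False
        self.reason = None


def zscore(value: float, history: list) -> float:
    """Distance of value from the historical mean, in standard deviations."""
    sigma = stdev(history)
    if sigma == 0:
        return 0.0
    return (value - mean(history)) / sigma


def check_order_rate(orders_per_min: float, history: list,
                     switch: KillSwitch, threshold: float = 4.0) -> float:
    score = zscore(orders_per_min, history)
    if abs(score) > threshold:
        switch.trip(f"order rate z-score {score:.1f} exceeds {threshold}")
    return score


history = [100, 104, 98, 101, 97, 103, 99, 102]  # hypothetical orders per minute
switch = KillSwitch()

check_order_rate(101, history, switch)
halted_after_normal = switch.halted

check_order_rate(450, history, switch)
halted_after_spike = switch.halted

print(halted_after_normal, halted_after_spike, switch.reason)
```

Making the switch latch, rather than clearing itself when readings return to normal, forces a person to review the event before trading resumes.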

Vendor Due Diligence and Third-Party Audits

Even when platforms rely on third-party tools, they remain accountable for ensuring security and compliance [2]. This makes vendor due diligence a critical step before signing contracts. Security teams should examine vendors' SOC reports, while compliance teams must confirm that vendors meet transparency requirements for regulatory explainability [1].

Ongoing third-party audits can further bolster security and compliance efforts. Annual evaluations should check for issues like bias in vendor training data, verify encryption standards, and ensure compliance with recordkeeping rules under Exchange Act Rules 17a-3 and 17a-4 [1]. In highly regulated industries, some platforms take additional steps by hosting proprietary trading models in Virtual Private Clouds (VPCs) or on-premises environments. This reduces exposure to public internet risks and meets stringent data residency requirements [13]. By combining these strategies, platforms can meet both security needs and regulatory demands.

Comparison Table: Mitigation Strategies for Security and Compliance

Here’s a quick look at some key strategies that address both security and compliance challenges:

| Mitigation Strategy | Security Benefits | Compliance Benefits | Implementation Example |
|---|---|---|---|
| Human-in-the-Loop | Prevents system failures during unusual market conditions | Ensures trades are explainable and fiduciary duties are upheld | Manual intervention for high-value trade blocks |
| Real-Time Monitoring | Quickly spots cyberattacks and suspicious activity | Identifies patterns like wash trading and market manipulation | Dashboards tracking model drift and trade anomalies |
| Private VPC/On-Premises | Reduces risk of data exposure to third parties or the public internet | Complies with strict data residency and privacy laws (e.g., GDPR) | Hosting trading models in isolated cloud environments |
| Vendor Audits | Confirms the security posture of third-party providers | Verifies adherence to recordkeeping and disclosure regulations | Annual reviews of vendor data and bias mitigation practices |

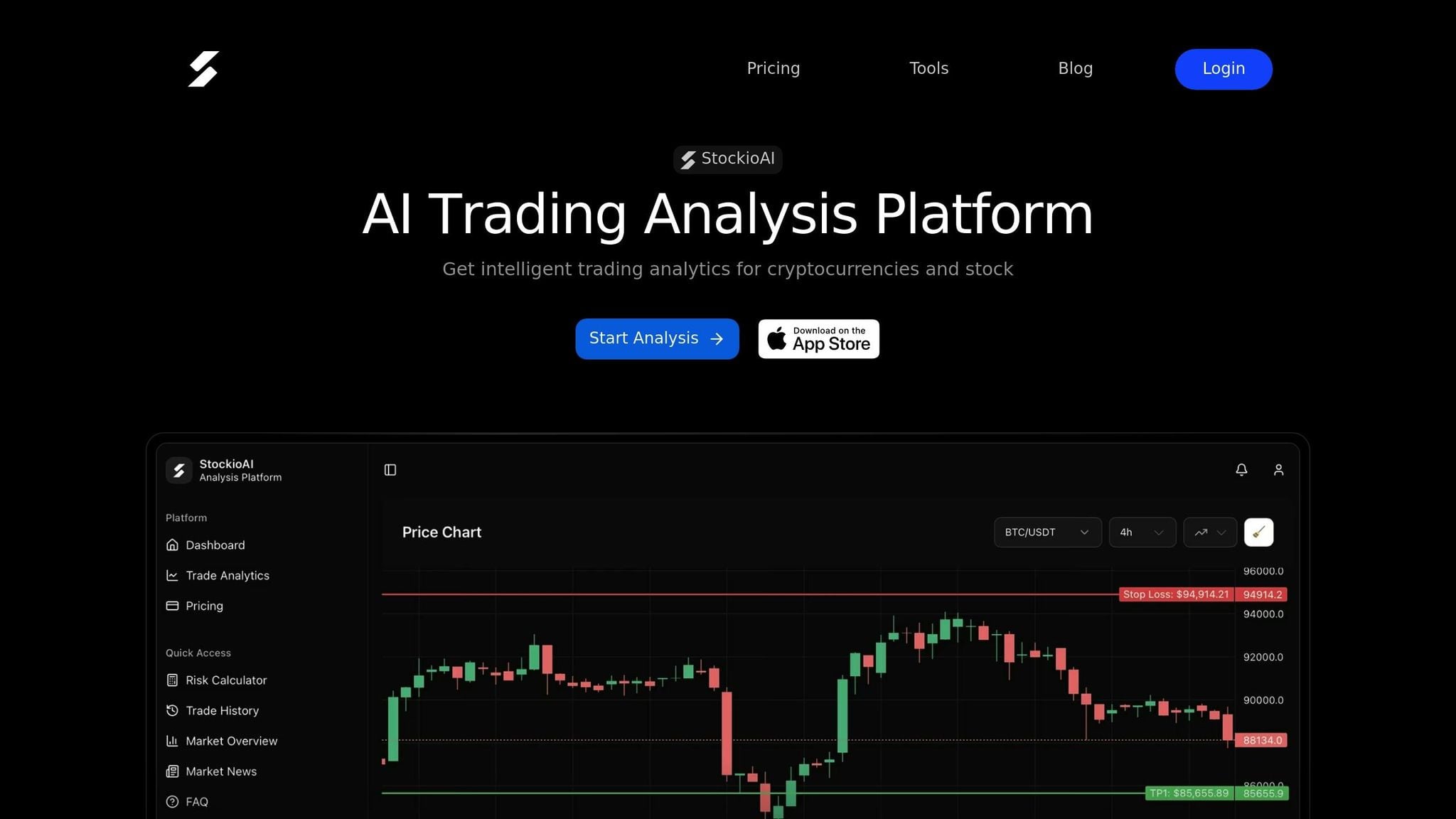

How StockioAI Addresses Security and Compliance

StockioAI tackles security and compliance head-on by embedding these priorities directly into its platform's architecture. It uses rigorous encryption, secure data storage, and strict access controls to protect trading data from unauthorized access or breaches [1][8]. Unlike public AI systems that may rely on external cloud storage, StockioAI operates as a proprietary and closed system, ensuring all client data remains within its secure environment [7]. This robust security framework sets the stage for its compliance measures.

To enhance compliance, StockioAI integrates Explainable AI (XAI) tools that provide transparency into trading decisions. Each trading signal is accompanied by a confidence score and interactive chart overlays, making the decision-making process clear. This empowers traders and compliance teams to audit decisions effectively and meet regulatory transparency requirements [1]. The platform's 7-Tier Priority System evaluates factors like market structure, volume, trend phases, and technical indicators, ensuring outputs are both consistent and explainable.

StockioAI also addresses regulatory requirements with tools like its Risk Calculator, which prevents over-leveraging by factoring in account balance, risk tolerance, and market volatility to determine appropriate position sizes. Its Multi-Timeframe Validation feature reduces false positives by comparing short-term and long-term trends, while the Conflict Resolution Matrix ensures consistent guidance when signals conflict. Together, these features align with supervisory standards under FINRA Rules 3110 and 3120 [1][5].
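To show how account balance, risk tolerance, and volatility can combine into a position size, here is a sketch of a common fixed-fractional approach. This is not StockioAI's actual formula, which the article does not describe; the `position_size` function, the use of average true range (ATR) as the volatility measure, and the leverage cap are all assumptions for illustration.

```python
def position_size(balance: float, risk_fraction: float, atr: float, price: float,
                  stop_atr_multiple: float = 2.0, max_leverage: float = 1.0) -> float:
    """Units to trade so that hitting the stop loses about risk_fraction of balance.

    balance           account equity in quote currency
    risk_fraction     share of equity risked per trade, e.g. 0.01 for 1%
    atr               volatility measure in price units (assumed: average true range)
    price             current price per unit
    stop_atr_multiple stop distance expressed in multiples of atr
    max_leverage      cap on notional exposure relative to balance
    """
    if balance <= 0 or price <= 0 or atr <= 0 or stop_atr_multiple <= 0:
        raise ValueError("balance, price, atr and stop_atr_multiple must be positive")
    if not 0 < risk_fraction <= 1:
        raise ValueError("risk_fraction must be in (0, 1]")

    risk_budget = balance * risk_fraction       # money at risk on this trade
    stop_distance = atr * stop_atr_multiple     # wider stops in volatile markets
    units_by_risk = risk_budget / stop_distance
    units_by_leverage = balance * max_leverage / price  # guard against over-leverage
    return min(units_by_risk, units_by_leverage)


calm = position_size(balance=10_000, risk_fraction=0.01, atr=2.0, price=50.0)
choppy = position_size(balance=10_000, risk_fraction=0.01, atr=8.0, price=50.0)
capped = position_size(balance=10_000, risk_fraction=0.01, atr=0.05, price=50.0)

print(calm, choppy, capped)
```

With the same balance and risk tolerance, the position shrinks as volatility rises, and the leverage cap takes over when a very tight stop would otherwise imply an oversized position.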

Real-time monitoring is another cornerstone of StockioAI's approach. The platform's Market Regime Classification identifies market conditions - whether trending, ranging, volatile, or quiet - allowing strategies to adapt dynamically. Anomaly detection flags unusual patterns that could indicate manipulation or security threats specific to the platform's operations [16]. The Conflict Resolution Matrix also plays a role here: it manages 15 distinct scenarios for position sizing, so traders receive clear and consistent guidance that supports both operational security and regulatory transparency.
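The four regime labels can be illustrated with a deliberately simple rule-based classifier. The article does not describe how StockioAI's classification works, so everything here is an assumption: the `classify_regime` function, the use of return volatility and an efficiency ratio (net price move divided by total path length), and the threshold values.

```python
from statistics import stdev


def classify_regime(prices: list, volatile_vol: float = 0.02,
                    quiet_vol: float = 0.002, trend_efficiency: float = 0.6) -> str:
    """Label a price window as 'volatile', 'trending', 'quiet', or 'ranging'."""
    if len(prices) < 3:
        raise ValueError("need at least three prices")

    returns = [(b - a) / a for a, b in zip(prices, prices[1:])]
    volatility = stdev(returns)

    # Efficiency ratio: 1.0 for a straight-line move, near 0 for back-and-forth.
    path_length = sum(abs(b - a) for a, b in zip(prices, prices[1:]))
    efficiency = abs(prices[-1] - prices[0]) / path_length if path_length else 0.0

    if volatility > volatile_vol:
        return "volatile"
    if efficiency >= trend_efficiency:
        return "trending"
    if volatility < quiet_vol:
        return "quiet"
    return "ranging"


trending = classify_regime([100, 101, 102, 103, 104, 105])
ranging = classify_regime([100, 101, 100, 101, 100, 101])
quiet = classify_regime([100, 100.05, 100, 100.05, 100, 100.05])
volatile = classify_regime([100, 106, 99, 107, 98, 105])

print(trending, ranging, quiet, volatile)
```

Because every label follows from two named quantities and fixed thresholds, a compliance reviewer can trace exactly why a window was classified the way it was, which is the kind of explainability the surrounding sections call for.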

Conclusion

Ensuring security and compliance in AI trading platforms isn’t just a regulatory requirement - it’s a fundamental part of running a successful operation. Agencies like the SEC, FINRA, and CFTC have made it clear: the same rules apply to AI as they do to any other technology. Firms are fully responsible for supervision, recordkeeping, and risk management, no matter how advanced or complex their algorithms become [5]. Recent enforcement actions, including hefty fines for lapses in oversight and algorithm failures, underscore the risks of falling short.

To address these challenges, integrating strong governance practices is a must. Effective AI trading systems rely on cross-functional governance that brings together business, technology, security, compliance, legal, and risk management teams. Firms should maintain a detailed inventory of their AI models, assign clear risk ratings, and rigorously test each model - ideally running them in parallel with legacy systems before full deployment. Moving away from opaque "black box" models toward Explainable AI (XAI) is also critical, as it allows compliance teams to audit and understand how decisions are made.

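Another key strategy is keeping humans involved in high-risk decisions and having written fallback plans in place for system failures. Accountability for these safeguards stays with the firm even when AI functions are handed to a vendor. As FINRA emphasizes: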

"Outsourcing an activity or function to a third-party does not relieve them of their ultimate responsibility for compliance with all applicable securities laws and regulations and FINRA rules" [1].

With 77% of financial services executives identifying AI as critical to their strategies [4], the pressure to get this right has never been greater. The platforms that prioritize security and compliance from the very beginning - rather than treating them as afterthoughts - will be better equipped to navigate this complex landscape. By embedding these principles into their systems, firms can safeguard both their operational stability and the integrity of the markets they serve.

FAQs

How can AI trading platforms ensure both security and regulatory compliance?

AI trading platforms can strike a solid balance between security and compliance by combining advanced technical safeguards with strong governance practices. On the security front, platforms should employ encryption, multi-factor authentication, and continuous monitoring to protect sensitive user data and fend off cyber threats. Meanwhile, compliance efforts should focus on maintaining detailed audit trails, ensuring algorithm transparency, and adhering to U.S. regulatory standards set by bodies like the SEC, FINRA, and CFTC.

To meet these high standards, platforms can integrate tools such as real-time anomaly detection, risk management calculators, and model validation processes. These tools not only help detect and prevent market manipulation but also provide the necessary documentation for regulatory audits. For instance, platforms like StockioAI showcase how this balance can be achieved by offering features like AI-driven pattern recognition, dynamic risk calculators, and secure data encryption - all designed to build user trust while staying prepared for regulatory requirements.

What security risks are unique to AI-powered trading platforms?

AI-powered trading platforms come with their own set of security risks that extend beyond those faced by traditional systems. Among the most pressing concerns are prompt injection attacks, where harmful inputs trick AI models into revealing sensitive information or executing unauthorized trades, and data poisoning, where attackers tamper with training data to create biased predictions or cause trading errors. Another growing issue is shadow AI, which refers to employees using unauthorized AI tools that could unintentionally expose confidential market data.

Additional threats include AI-enabled fraud and manipulation, where adversarial outputs can distort market prices, and weaknesses in third-party tools or APIs, which hackers might exploit to introduce malicious code. There’s also the risk of model theft, where proprietary algorithms are reverse-engineered, jeopardizing both security and a company’s competitive edge.

Trading platforms like StockioAI tackle these challenges by implementing strong input validation, secure model training practices, continuous monitoring, and thorough third-party risk management. These measures help maintain security and compliance across the trading ecosystem.

Why is it important for AI in trading to be transparent for regulatory compliance?

In the world of AI-powered trading, transparency isn't just a nice-to-have - it’s a must to meet U.S. regulatory standards. Organizations like the SEC and FINRA require firms to clearly outline how their AI models operate. This includes explaining how buy, sell, or hold signals are generated, how risks are evaluated, and how potential conflicts of interest are managed. Transparent systems make it easier for regulators to ensure that AI isn’t being used to introduce bias, manipulate markets, or engage in unlawful activities.

For trading platforms, this means a strong focus on maintaining audit trails, offering clear documentation, and ensuring real-time explainability for every decision made. Platforms like StockioAI are stepping up to the challenge by delivering AI-driven analytics and trade signals paired with detailed rationales and risk metrics. This not only helps firms stay on the right side of regulations but also fosters trust among investors by promoting fairness and accountability in trading practices.